An introduction to different types of study design

Posted on 6th April 2021 by Hadi Abbas

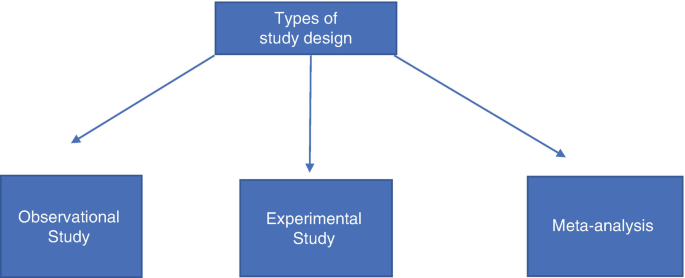

Study designs are the set of methods and procedures used to collect and analyze data in a study.

Broadly speaking, there are 2 types of study designs: descriptive studies and analytical studies.

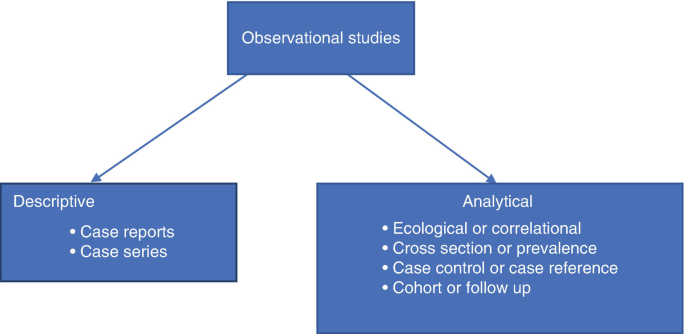

Descriptive studies

- Describe specific characteristics in a population of interest

- The most common forms are case reports and case series

- In a case report, we describe a single patient’s symptoms, signs, diagnosis, and treatment

- In a case series, several patients with similar presentations are described together

Analytical Studies

Analytical studies are of 2 types: observational and experimental.

Observational studies are studies that we conduct without any intervention or experiment. In those studies, we purely observe the outcomes. On the other hand, in experimental studies, we conduct experiments and interventions.

Observational studies

Observational studies include many subtypes. Below, I will discuss the most common designs.

Cross-sectional study:

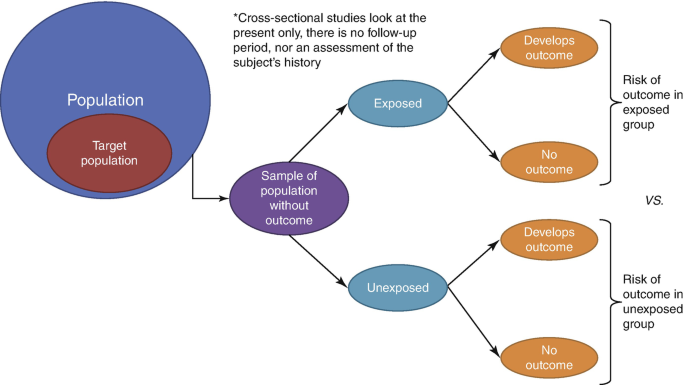

- This design is transverse where we take a specific sample at a specific time without any follow-up

- It allows us to calculate the frequency of a disease (prevalence) or the frequency of a risk factor

- This design is easy to conduct

- For example – if we want to know the prevalence of migraine in a population, we can conduct a cross-sectional study whereby we take a sample from the population and calculate the proportion of people in the sample who have migraine headaches.
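As a rough illustration of the migraine example, prevalence is the number of people with the condition divided by the size of the sample. The sketch below is only an assumption-laden example: the function name `prevalence` and the survey counts are made up for illustration.

```python
def prevalence(cases: int, sample_size: int) -> float:
    """Proportion of the sample that has the condition at the time of the survey."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= cases <= sample_size:
        raise ValueError("cases must be between 0 and sample_size")
    return cases / sample_size


# Hypothetical survey (invented numbers): 150 of 1,000 sampled people have migraine.
migraine_prevalence = prevalence(cases=150, sample_size=1000)
print(f"Prevalence of migraine in the sample: {migraine_prevalence:.1%}")
```

Because there is no follow-up, this figure describes a single point in time and says nothing about how many new cases arise.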

Cohort study:

- We conduct this study by comparing two samples from the population: one sample with a risk factor while the other lacks this risk factor

- It shows us the risk of developing the disease in individuals with the risk factor compared to those without the risk factor (RR = relative risk)

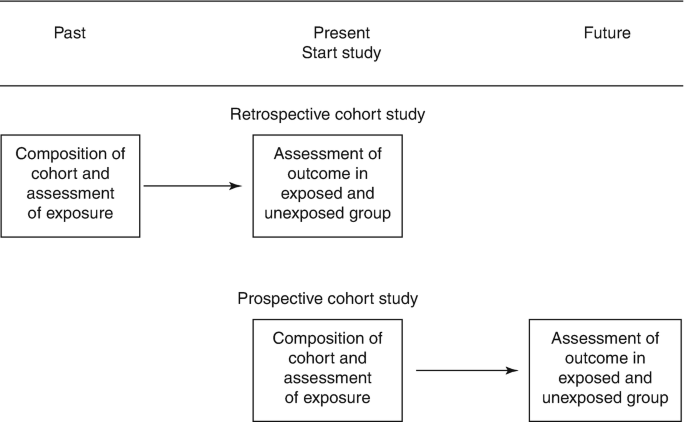

- Prospective: we follow the individuals into the future to know who will develop the disease

- Retrospective: we look to the past to know who developed the disease (e.g. using medical records)

- This design is the strongest among the observational studies

- For example – to find out the relative risk of developing chronic obstructive pulmonary disease (COPD) among smokers, we take a sample including smokers and non-smokers. Then, we compare the proportion of individuals who develop COPD in each group.
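The relative risk in the COPD example can be sketched as the risk in the exposed group divided by the risk in the unexposed group. The function name `relative_risk` and all counts below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def relative_risk(exposed_cases: int, exposed_total: int,
                  unexposed_cases: int, unexposed_total: int) -> float:
    """Risk of disease in the exposed group divided by risk in the unexposed group."""
    if exposed_total <= 0 or unexposed_total <= 0:
        raise ValueError("group sizes must be positive")
    risk_exposed = exposed_cases / exposed_total
    risk_unexposed = unexposed_cases / unexposed_total
    if risk_unexposed == 0:
        raise ValueError("relative risk is undefined when the unexposed risk is zero")
    return risk_exposed / risk_unexposed


# Hypothetical cohort (invented numbers):
# 30 of 200 smokers and 10 of 200 non-smokers develop COPD.
rr = relative_risk(30, 200, 10, 200)
print(f"Relative risk of COPD in smokers vs non-smokers: {rr:.2f}")
```

A relative risk above 1 suggests the disease is more common in the exposed group; a value of 1 suggests no difference between the groups.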

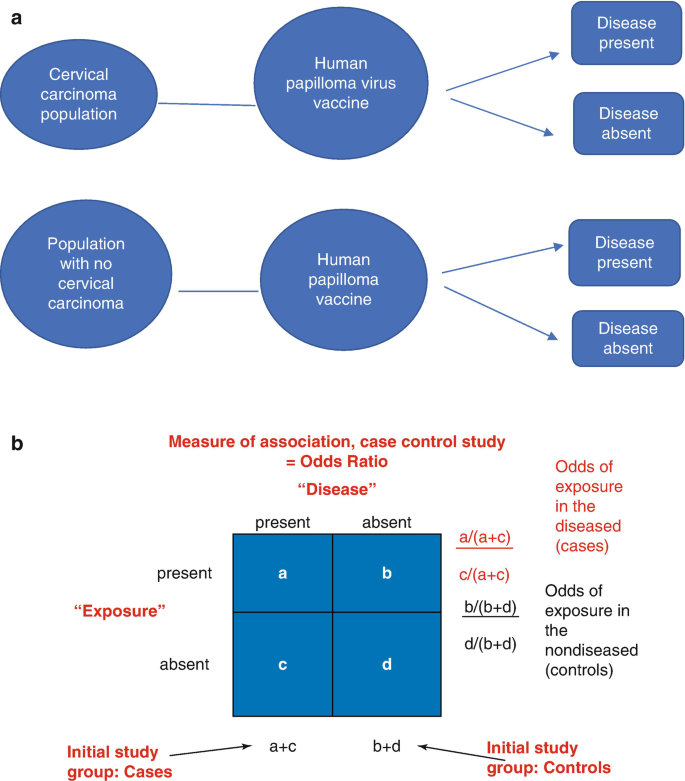

Case-Control Study:

- We conduct this study by comparing 2 groups: one group with the disease (cases) and another group without the disease (controls)

- This design is always retrospective

- We aim to find out the odds of having a risk factor or an exposure if an individual has a specific disease (Odds ratio)

- Relatively easy to conduct

- For example – we want to study the odds of being a smoker among hypertensive patients compared to normotensive ones. To do so, we choose a group of patients diagnosed with hypertension and another group that serves as the control (normal blood pressure). Then we study their smoking history to find out if there is an association.
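For the hypertension example, the odds ratio compares the odds of exposure (smoking) among cases with the odds of exposure among controls. The following is a minimal sketch; the function name `odds_ratio` and the counts are assumptions made up for illustration.

```python
def odds_ratio(cases_exposed: int, cases_unexposed: int,
               controls_exposed: int, controls_unexposed: int) -> float:
    """Odds of exposure among cases divided by odds of exposure among controls."""
    if cases_unexposed == 0 or controls_exposed == 0:
        raise ValueError("odds ratio is undefined when a denominator cell is zero")
    # (a / b) / (c / d) rearranged as (a * d) / (b * c)
    return (cases_exposed * controls_unexposed) / (cases_unexposed * controls_exposed)


# Hypothetical case-control data (invented numbers):
# 60 of 100 hypertensive patients smoke; 40 of 100 normotensive controls smoke.
result = odds_ratio(cases_exposed=60, cases_unexposed=40,
                    controls_exposed=40, controls_unexposed=60)
print(f"Odds ratio for smoking, cases vs controls: {result:.2f}")
```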

Experimental Studies

- Also known as interventional studies

- Can involve animals and humans

- Pre-clinical trials involve animals

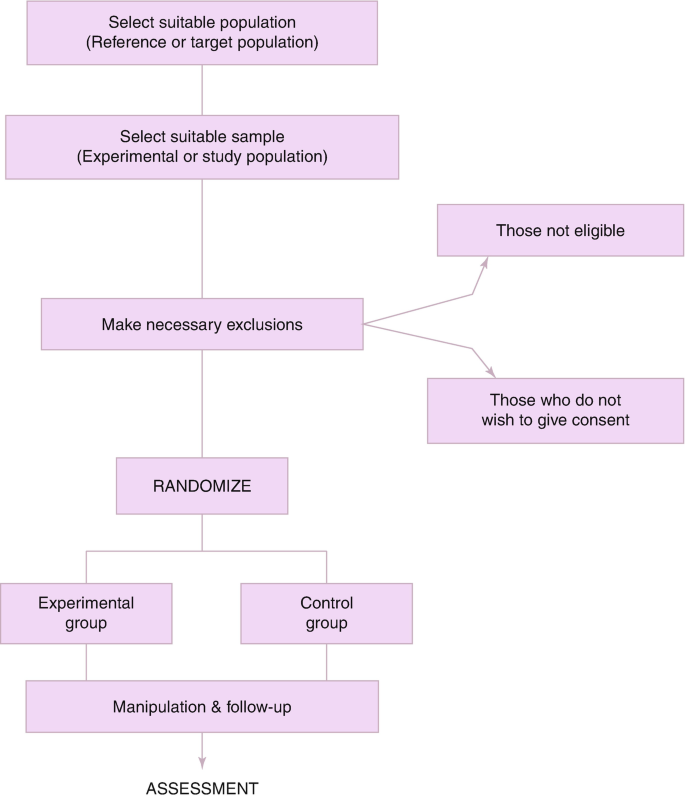

- Clinical trials are experimental studies involving humans

- In clinical trials, we study the effect of an intervention compared to another intervention or placebo. As an example, I have listed the four phases of a drug trial:

I: We aim to assess the safety of the drug (is it safe?)

II: We aim to assess the efficacy of the drug (does it work?)

III: We want to know if this drug is better than the old treatment (is it better?)

IV: We follow up to detect long-term side effects (can it stay on the market?)

- In randomized controlled trials, one group of participants receives the control, while the other receives the tested drug/intervention. Those studies are the best way to evaluate the efficacy of a treatment.

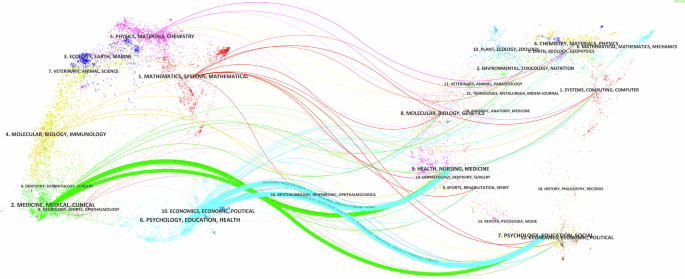

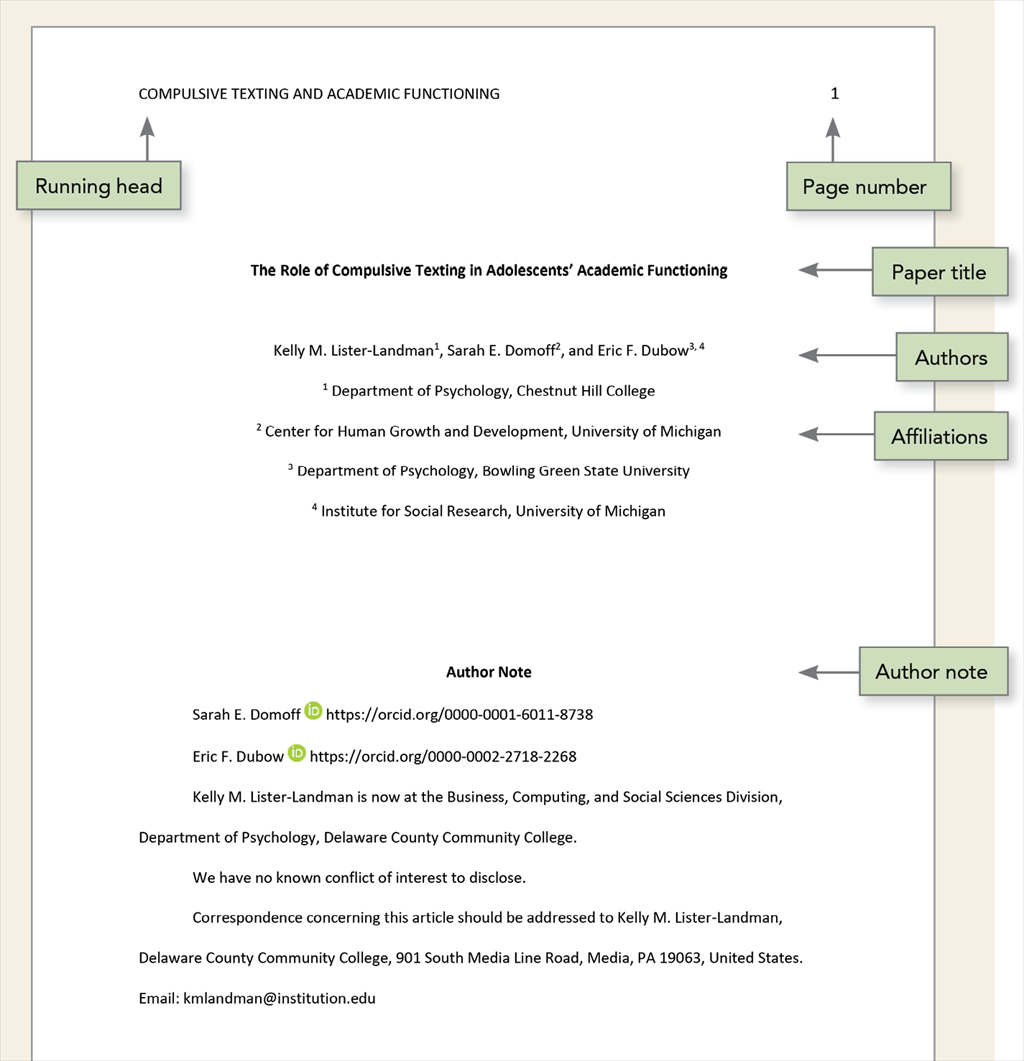

Finally, the figure below will help you with your understanding of different types of study designs.


Research Design – Types, Methods and Examples

Research Design

Definition:

Research design refers to the overall strategy or plan for conducting a research study. It outlines the methods and procedures that will be used to collect and analyze data, as well as the goals and objectives of the study. Research design is important because it guides the entire research process and ensures that the study is conducted in a systematic and rigorous manner.

Types of Research Design

The main types of research design are as follows:

Descriptive Research Design

This type of research design is used to describe a phenomenon or situation. It involves collecting data through surveys, questionnaires, interviews, and observations. The aim of descriptive research is to provide an accurate and detailed portrayal of a particular group, event, or situation. It can be useful in identifying patterns, trends, and relationships in the data.

Correlational Research Design

Correlational research design is used to determine if there is a relationship between two or more variables. This type of research design involves collecting data from participants and analyzing the relationship between the variables using statistical methods. The aim of correlational research is to identify the strength and direction of the relationship between the variables.

Experimental Research Design

Experimental research design is used to investigate cause-and-effect relationships between variables. This type of research design involves manipulating one variable and measuring the effect on another variable. It usually involves randomly assigning participants to groups and manipulating an independent variable to determine its effect on a dependent variable. The aim of experimental research is to establish causality.

Quasi-experimental Research Design

Quasi-experimental research design is similar to experimental research design, but it lacks one or more of the features of a true experiment. For example, there may not be random assignment to groups or a control group. This type of research design is used when it is not feasible or ethical to conduct a true experiment.

Case Study Research Design

Case study research design is used to investigate a single case or a small number of cases in depth. It involves collecting data through various methods, such as interviews, observations, and document analysis. The aim of case study research is to provide an in-depth understanding of a particular case or situation.

Longitudinal Research Design

Longitudinal research design is used to study changes in a particular phenomenon over time. It involves collecting data at multiple time points and analyzing the changes that occur. The aim of longitudinal research is to provide insights into the development, growth, or decline of a particular phenomenon over time.

Structure of Research Design

The format of a research design typically includes the following sections:

- Introduction: This section provides an overview of the research problem, the research questions, and the importance of the study. It also includes a brief literature review that summarizes previous research on the topic and identifies gaps in the existing knowledge.

- Research Questions or Hypotheses: This section identifies the specific research questions or hypotheses that the study will address. These questions should be clear, specific, and testable.

- Research Methods: This section describes the methods that will be used to collect and analyze data. It includes details about the study design, the sampling strategy, the data collection instruments, and the data analysis techniques.

- Data Collection: This section describes how the data will be collected, including the sample size, data collection procedures, and any ethical considerations.

- Data Analysis: This section describes how the data will be analyzed, including the statistical techniques that will be used to test the research questions or hypotheses.

- Results: This section presents the findings of the study, including descriptive statistics and statistical tests.

- Discussion and Conclusion: This section summarizes the key findings of the study, interprets the results, and discusses the implications of the findings. It also includes recommendations for future research.

- References: This section lists the sources cited in the research design.

Example of Research Design

An example of a research design could be:

Research question: Does the use of social media affect the academic performance of high school students?

Research design:

- Research approach: The research approach will be quantitative as it involves collecting numerical data to test the hypothesis.

- Research design: The study will use a quasi-experimental pretest-posttest design with a control group.

- Sample: The sample will be 200 high school students from two schools, with 100 students in the experimental group and 100 students in the control group.

- Data collection: The data will be collected through surveys administered to the students at the beginning and end of the academic year. The surveys will include questions about their social media usage and academic performance.

- Data analysis: The data collected will be analyzed using statistical software. The mean scores of the experimental and control groups will be compared to determine whether there is a significant difference in academic performance between the two groups.

- Limitations: The limitations of the study will be acknowledged, including the fact that social media usage can vary greatly among individuals, and the study only focuses on two schools, which may not be representative of the entire population.

- Ethical considerations: Ethical considerations will be taken into account, such as obtaining informed consent from the participants and ensuring their anonymity and confidentiality.
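The comparison of mean scores in a pretest-posttest design with a control group can be sketched as follows. This is only an illustration under stated assumptions: the scores are invented, the helper `mean_change` is hypothetical, and the sketch computes a descriptive difference in mean change only. Judging whether the difference is statistically significant would require an additional test, which is not shown here.

```python
from statistics import mean


def mean_change(pretest: list[float], posttest: list[float]) -> float:
    """Average within-student change from the pretest to the posttest."""
    if len(pretest) != len(posttest) or not pretest:
        raise ValueError("pretest and posttest must be non-empty and the same length")
    return mean([post - pre for pre, post in zip(pretest, posttest)])


# Invented academic scores for four students per group (illustration only).
experimental_pre, experimental_post = [70, 65, 80, 75], [68, 66, 76, 74]
control_pre, control_post = [72, 68, 78, 74], [73, 68, 79, 74]

experimental_change = mean_change(experimental_pre, experimental_post)
control_change = mean_change(control_pre, control_post)

# Difference in mean change between the groups (experimental minus control).
difference = experimental_change - control_change
print(f"Experimental change: {experimental_change:+.2f}")
print(f"Control change:      {control_change:+.2f}")
print(f"Difference:          {difference:+.2f}")
```

Comparing changes rather than raw posttest scores helps account for groups that started at different levels, which matters when students are not randomly assigned.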

How to Write Research Design

Writing a research design involves planning and outlining the methodology and approach that will be used to answer a research question or hypothesis. Here are some steps to help you write a research design:

- Define the research question or hypothesis: Before beginning your research design, you should clearly define your research question or hypothesis. This will guide your research design and help you select appropriate methods.

- Select a research design: There are many different research designs to choose from, including experimental, survey, case study, and qualitative designs. Choose a design that best fits your research question and objectives.

- Develop a sampling plan: If your research involves collecting data from a sample, you will need to develop a sampling plan. This should outline how you will select participants and how many participants you will include.

- Define variables: Clearly define the variables you will be measuring or manipulating in your study. This will help ensure that your results are meaningful and relevant to your research question.

- Choose data collection methods: Decide on the data collection methods you will use to gather information. This may include surveys, interviews, observations, experiments, or secondary data sources.

- Create a data analysis plan: Develop a plan for analyzing your data, including the statistical or qualitative techniques you will use.

- Consider ethical concerns: Finally, be sure to consider any ethical concerns related to your research, such as participant confidentiality or potential harm.

When to Write Research Design

Research design should be written before conducting any research study. It is an important planning phase that outlines the research methodology, data collection methods, and data analysis techniques that will be used to investigate a research question or problem. The research design helps to ensure that the research is conducted in a systematic and logical manner, and that the data collected is relevant and reliable.

Ideally, the research design should be developed as early as possible in the research process, before any data is collected. This allows the researcher to carefully consider the research question, identify the most appropriate research methodology, and plan the data collection and analysis procedures in advance. By doing so, the research can be conducted in a more efficient and effective manner, and the results are more likely to be valid and reliable.

Purpose of Research Design

The purpose of research design is to plan and structure a research study in a way that enables the researcher to achieve the desired research goals with accuracy, validity, and reliability. Research design is the blueprint or the framework for conducting a study that outlines the methods, procedures, techniques, and tools for data collection and analysis.

Some of the key purposes of research design include:

- Providing a clear and concise plan of action for the research study.

- Ensuring that the research is conducted ethically and with rigor.

- Maximizing the accuracy and reliability of the research findings.

- Minimizing the possibility of errors, biases, or confounding variables.

- Ensuring that the research is feasible, practical, and cost-effective.

- Determining the appropriate research methodology to answer the research question(s).

- Identifying the sample size, sampling method, and data collection techniques.

- Determining the data analysis method and statistical tests to be used.

- Facilitating the replication of the study by other researchers.

- Enhancing the validity and generalizability of the research findings.

Applications of Research Design

There are numerous applications of research design in various fields, some of which are:

- Social sciences: In fields such as psychology, sociology, and anthropology, research design is used to investigate human behavior and social phenomena. Researchers use various research designs, such as experimental, quasi-experimental, and correlational designs, to study different aspects of social behavior.

- Education: Research design is essential in the field of education to investigate the effectiveness of different teaching methods and learning strategies. Researchers use various designs such as experimental, quasi-experimental, and case study designs to understand how students learn and how to improve teaching practices.

- Health sciences: In the health sciences, research design is used to investigate the causes, prevention, and treatment of diseases. Researchers use various designs, such as randomized controlled trials, cohort studies, and case-control studies, to study different aspects of health and healthcare.

- Business: Research design is used in the field of business to investigate consumer behavior, marketing strategies, and the impact of different business practices. Researchers use various designs, such as survey research, experimental research, and case studies, to study different aspects of the business world.

- Engineering: In the field of engineering, research design is used to investigate the development and implementation of new technologies. Researchers use various designs, such as experimental research and case studies, to study the effectiveness of new technologies and to identify areas for improvement.

Advantages of Research Design

Here are some advantages of research design:

- Systematic and organized approach : A well-designed research plan ensures that the research is conducted in a systematic and organized manner, which makes it easier to manage and analyze the data.

- Clear objectives: The research design helps to clarify the objectives of the study, which makes it easier to identify the variables that need to be measured, and the methods that need to be used to collect and analyze data.

- Minimizes bias: A well-designed research plan minimizes the chances of bias, by ensuring that the data is collected and analyzed objectively, and that the results are not influenced by the researcher’s personal biases or preferences.

- Efficient use of resources: A well-designed research plan helps to ensure that the resources (time, money, and personnel) are used efficiently and effectively, by focusing on the most important variables and methods.

- Replicability: A well-designed research plan makes it easier for other researchers to replicate the study, which enhances the credibility and reliability of the findings.

- Validity: A well-designed research plan helps to ensure that the findings are valid, by ensuring that the methods used to collect and analyze data are appropriate for the research question.

- Generalizability : A well-designed research plan helps to ensure that the findings can be generalized to other populations, settings, or situations, which increases the external validity of the study.

Research Design Vs Research Methodology

| Research Design | Research Methodology |
|---|---|
| The plan and structure for conducting research that outlines the procedures to be followed to collect and analyze data. | The set of principles, techniques, and tools used to carry out the research plan and achieve research objectives. |
| Describes the overall approach and strategy used to conduct research, including the type of data to be collected, the sources of data, and the methods for collecting and analyzing data. | Refers to the techniques and methods used to gather, analyze and interpret data, including sampling techniques, data collection methods, and data analysis techniques. |
| Helps to ensure that the research is conducted in a systematic, rigorous, and valid way, so that the results are reliable and can be used to make sound conclusions. | Includes a set of procedures and tools that enable researchers to collect and analyze data in a consistent and valid manner, regardless of the research design used. |
| Common research designs include experimental, quasi-experimental, correlational, and descriptive studies. | Common research methodologies include qualitative, quantitative, and mixed-methods approaches. |
| Determines the overall structure of the research project and sets the stage for the selection of appropriate research methodologies. | Guides the researcher in selecting the most appropriate research methods based on the research question, research design, and other contextual factors. |
| Helps to ensure that the research project is feasible, relevant, and ethical. | Helps to ensure that the data collected is accurate, valid, and reliable, and that the research findings can be interpreted and generalized to the population of interest. |

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Research Design 101

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Eunice Rautenbach (DTech) & Kerryn Warren (PhD) | April 2023

Navigating the world of research can be daunting, especially if you’re a first-time researcher. One concept you’re bound to run into fairly early in your research journey is that of “research design”. Here, we’ll guide you through the basics using practical examples, so that you can approach your research with confidence.

What is research design?


Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final data analysis. A good research design serves as the blueprint for how you, as the researcher, will collect and analyse data while ensuring consistency, reliability and validity throughout your study.

Understanding different types of research designs is essential as it helps ensure that your approach is suitable given your research aims, objectives and questions, as well as the resources you have available to you. Without a clear big-picture view of how you’ll design your research, you run the risk of making misaligned choices in terms of your methodology – especially your sampling, data collection and data analysis decisions.

The problem with defining research design…

One of the reasons students struggle with a clear definition of research design is because the term is used very loosely across the internet, and even within academia.

Some sources claim that the three research design types are qualitative, quantitative and mixed methods, which isn’t quite accurate (these just refer to the type of data that you’ll collect and analyse). Other sources state that research design refers to the sum of all your design choices, suggesting it’s more like a research methodology. Others run off on other less common tangents. No wonder there’s confusion!

In this article, we’ll clear up the confusion. We’ll explain the most common research design types for both qualitative and quantitative research projects, whether that is for a full dissertation or thesis, or a smaller research paper or article.

Research Design: Quantitative Studies

Quantitative research involves collecting and analysing data in a numerical form. Broadly speaking, there are four types of quantitative research designs: descriptive, correlational, experimental, and quasi-experimental.

Descriptive Research Design

As the name suggests, descriptive research design focuses on describing existing conditions, behaviours, or characteristics by systematically gathering information without manipulating any variables. In other words, there is no intervention on the researcher’s part – only data collection.

For example, if you’re studying smartphone addiction among adolescents in your community, you could deploy a survey to a sample of teens asking them to rate their agreement with certain statements that relate to smartphone addiction. The collected data would then provide insight regarding how widespread the issue may be – in other words, it would describe the situation.

The key defining attribute of this type of research design is that it purely describes the situation. In other words, descriptive research design does not explore potential relationships between different variables or the causes that may underlie those relationships. Therefore, descriptive research is useful for generating insight into a research problem by describing its characteristics. By doing so, it can provide valuable insights and is often used as a precursor to other research design types.

Correlational Research Design

Correlational design is a popular choice for researchers aiming to identify and measure the relationship between two or more variables without manipulating them. In other words, this type of research design is useful when you want to know whether a change in one thing tends to be accompanied by a change in another thing.

For example, if you wanted to explore the relationship between exercise frequency and overall health, you could use a correlational design to help you achieve this. In this case, you might gather data on participants’ exercise habits, as well as records of their health indicators like blood pressure, heart rate, or body mass index. Thereafter, you’d use a statistical test to assess whether there’s a relationship between the two variables (exercise frequency and health).
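One common way to quantify such a relationship is the Pearson correlation coefficient, which ranges from -1 to 1. The sketch below is an assumption on our part rather than a method prescribed here: the function `pearson_r` is written by hand for illustration, and the exercise and heart-rate figures are invented.

```python
from math import sqrt
from statistics import mean


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equally long lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = mean(x), mean(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return covariance / (spread_x * spread_y)


# Invented data: weekly exercise sessions and resting heart rate for six people.
exercise_sessions = [0, 1, 2, 3, 4, 5]
resting_heart_rate = [80, 78, 75, 73, 70, 68]

r = pearson_r(exercise_sessions, resting_heart_rate)
print(f"Pearson r between exercise frequency and resting heart rate: {r:.3f}")
```

A strongly negative value here would mean that more frequent exercise tends to go with a lower resting heart rate in this made-up sample; it would not show that exercise causes the lower heart rate.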

As you can see, correlational research design is useful when you want to explore potential relationships between variables that cannot be manipulated or controlled for ethical, practical, or logistical reasons. It is particularly helpful in terms of developing predictions, and given that it doesn’t involve the manipulation of variables, it can be implemented at a large scale more easily than experimental designs (which we’ll look at next).

That said, it’s important to keep in mind that correlational research design has limitations – most notably that it cannot be used to establish causality. In other words, correlation does not equal causation. To establish causality, you’ll need to move into the realm of experimental design, coming up next…

Experimental Research Design

Experimental research design is used to determine if there is a causal relationship between two or more variables. With this type of research design, you, as the researcher, manipulate one variable (the independent variable) and measure its effect on another (the dependent variable), while holding other variables constant. Doing so allows you to observe the effect of the former on the latter and draw conclusions about potential causality.

For example, if you wanted to measure if/how different types of fertiliser affect plant growth, you could set up several groups of plants, with each group receiving a different type of fertiliser, as well as one with no fertiliser at all. You could then measure how much each plant group grew (on average) over time and compare the results from the different groups to see which fertiliser was most effective.
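The comparison in the fertiliser example can be sketched as computing the average growth per group and picking the largest. Everything specific below is an assumption for illustration: the group names, the growth measurements and the helper `compare_groups` are all made up.

```python
from statistics import mean


def compare_groups(growth_by_group: dict[str, list[float]]) -> tuple[str, dict[str, float]]:
    """Return the group with the highest average growth and all group averages."""
    averages = {group: mean(values) for group, values in growth_by_group.items()}
    best = max(averages, key=averages.get)
    return best, averages


# Invented plant growth in centimetres over the study period (illustration only).
growth_cm = {
    "no fertiliser": [4.0, 5.0, 4.5],  # control group
    "fertiliser A": [6.0, 7.0, 6.5],
    "fertiliser B": [5.0, 5.5, 6.0],
}

best_group, group_means = compare_groups(growth_cm)
for group, average in group_means.items():
    print(f"{group}: mean growth {average:.2f} cm")
print(f"Highest mean growth: {best_group}")
```

In a real experiment you would also check whether the differences between groups are larger than expected by chance before declaring one fertiliser more effective.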

Overall, experimental research design provides researchers with a powerful way to identify and measure causal relationships (and the direction of causality) between variables. However, developing a rigorous experimental design can be challenging as it’s not always easy to control all the variables in a study. This often results in smaller sample sizes, which can reduce the statistical power and generalisability of the results.

Moreover, experimental research design requires random assignment. This means that the researcher needs to assign participants to different groups or conditions in a way that each participant has an equal chance of being assigned to any group (note that this is not the same as random sampling). Doing so helps reduce the potential for bias and confounding variables. This need for random assignment can lead to ethics-related issues. For example, withholding a potentially beneficial medical treatment from a control group may be considered unethical in certain situations.
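The random assignment described above can be sketched by shuffling the participant list and dealing participants into groups in turn. This is one simple approach among several, shown under stated assumptions: the function `randomly_assign`, the group labels and the participant IDs are hypothetical.

```python
import random


def randomly_assign(participants, groups=("treatment", "control"), seed=None):
    """Shuffle participants, then deal them into the groups in turn.

    Every participant has the same chance of landing in any group, and
    group sizes differ by at most one.
    """
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    allocation = {group: [] for group in groups}
    for index, participant in enumerate(shuffled):
        allocation[groups[index % len(groups)]].append(participant)
    return allocation


# Hypothetical participant IDs 1 to 20, already recruited into the study.
allocation = randomly_assign(range(1, 21), seed=42)
for group, members in allocation.items():
    print(f"{group}: {sorted(members)}")
```

Note that this step allocates people who are already in the study. Random sampling, by contrast, concerns how those people were selected from the wider population in the first place.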

Quasi-Experimental Research Design

Quasi-experimental research design is used when the research aims involve identifying causal relations, but one cannot (or doesn’t want to) randomly assign participants to different groups (for practical or ethical reasons). Instead, with a quasi-experimental research design, the researcher relies on existing groups or pre-existing conditions to form groups for comparison.

For example, if you were studying the effects of a new teaching method on student achievement in a particular school district, you may be unable to randomly assign students to either group and instead have to choose classes or schools that already use different teaching methods. This way, you still achieve separate groups, without having to assign participants to specific groups yourself.

Naturally, quasi-experimental research designs have limitations when compared to experimental designs. Given that participant assignment is not random, it’s more difficult to confidently establish causality between variables, and, as a researcher, you have less control over other variables that may impact findings.

All that said, quasi-experimental designs can still be valuable in research contexts where random assignment is not possible and can often be undertaken on a much larger scale than experimental research, thus increasing the statistical power of the results. What’s important is that you, as the researcher, understand the limitations of the design and conduct your quasi-experiment as rigorously as possible, paying careful attention to any potential confounding variables.

Research Design: Qualitative Studies

There are many different research design types when it comes to qualitative studies, but here we’ll narrow our focus to explore the “Big 4”. Specifically, we’ll look at phenomenological design, grounded theory design, ethnographic design, and case study design.

Phenomenological Research Design

Phenomenological design involves exploring the meaning of lived experiences and how they are perceived by individuals. This type of research design seeks to understand people’s perspectives, emotions, and behaviours in specific situations. Here, the aim for researchers is to uncover the essence of human experience without making any assumptions or imposing preconceived ideas on their subjects.

For example, you could adopt a phenomenological design to study why cancer survivors have such varied perceptions of their lives after overcoming their disease. This could be achieved by interviewing survivors and then analysing the data using a qualitative analysis method such as thematic analysis to identify commonalities and differences.

Phenomenological research design typically involves in-depth interviews or open-ended questionnaires to collect rich, detailed data about participants’ subjective experiences. This richness is one of the key strengths of phenomenological research design but, naturally, it also has limitations. These include potential biases in data collection and interpretation and the lack of generalisability of findings to broader populations.

Grounded Theory Research Design

Grounded theory (also referred to as “GT”) aims to develop theories by continuously and iteratively analysing and comparing data collected from a relatively large number of participants in a study. It takes an inductive (bottom-up) approach, with a focus on letting the data “speak for itself”, without being influenced by preexisting theories or the researcher’s preconceptions.

As an example, let’s assume your research aims involved understanding how people cope with chronic pain from a specific medical condition, with a view to developing a theory around this. In this case, grounded theory design would allow you to explore this concept thoroughly without preconceptions about what coping mechanisms might exist. You may find that some patients prefer cognitive-behavioural therapy (CBT) while others prefer to rely on herbal remedies. Based on multiple, iterative rounds of analysis, you could then develop a theory in this regard, derived directly from the data (as opposed to other preexisting theories and models).

Grounded theory typically involves collecting data through interviews or observations and then analysing it to identify patterns and themes that emerge from the data. These emerging ideas are then validated by collecting more data until a saturation point is reached (i.e., no new information can be squeezed from the data). From that base, a theory can then be developed.

As you can see, grounded theory is ideally suited to studies where the research aims involve theory generation, especially in under-researched areas. Keep in mind though that this type of research design can be quite time-intensive, given the need for multiple rounds of data collection and analysis.

Ethnographic Research Design

Ethnographic design involves observing and studying a culture-sharing group of people in their natural setting to gain insight into their behaviours, beliefs, and values. The focus here is on observing participants in their natural environment (as opposed to a controlled environment). This typically involves the researcher spending an extended period of time with the participants in their environment, carefully observing and taking field notes.

All of this is not to say that ethnographic research design relies purely on observation. On the contrary, this design typically also involves in-depth interviews to explore participants’ views, beliefs, etc. However, unobtrusive observation is a core component of the ethnographic approach.

As an example, an ethnographer may study how different communities celebrate traditional festivals or how individuals from different generations interact with technology differently. This may involve a lengthy period of observation, combined with in-depth interviews to further explore specific areas of interest that emerge as a result of the observations that the researcher has made.

As you can probably imagine, ethnographic research design has the ability to provide rich, contextually embedded insights into the socio-cultural dynamics of human behaviour within a natural, uncontrived setting. Naturally, however, it does come with its own set of challenges, including researcher bias (since the researcher can become quite immersed in the group), participant confidentiality and, predictably, ethical complexities. All of these need to be carefully managed if you choose to adopt this type of research design.

Case Study Design

With case study research design, you, as the researcher, investigate a single individual (or a single group of individuals) to gain an in-depth understanding of their experiences, behaviours or outcomes. Unlike other research designs that are aimed at larger sample sizes, case studies offer a deep dive into the specific circumstances surrounding a person, group of people, event or phenomenon, generally within a bounded setting or context.

As an example, a case study design could be used to explore the factors influencing the success of a specific small business. This would involve diving deeply into the organisation to explore and understand what makes it tick – from marketing to HR to finance. In terms of data collection, this could include interviews with staff and management, review of policy documents and financial statements, surveying customers, etc.

While the above example is focused squarely on one organisation, it’s worth noting that case study research designs can have different variations, including single-case, multiple-case and longitudinal designs. As you can see in the example, a single-case design involves intensely examining a single entity to understand its unique characteristics and complexities. Conversely, in a multiple-case design, multiple cases are compared and contrasted to identify patterns and commonalities. Lastly, in a longitudinal case design, a single case or multiple cases are studied over an extended period of time to understand how factors develop over time.

As you can see, a case study research design is particularly useful where a deep and contextualised understanding of a specific phenomenon or issue is desired. However, this strength is also its weakness. In other words, you can’t generalise the findings from a case study to the broader population. So, keep this in mind if you’re considering going the case study route.

How To Choose A Research Design

Having worked through all of these potential research designs, you’d be forgiven for feeling a little overwhelmed and wondering, “But how do I decide which research design to use?”. While we could write an entire post covering that alone, here are a few factors to consider that will help you choose a suitable research design for your study.

Data type: The first determining factor is naturally the type of data you plan to be collecting – i.e., qualitative or quantitative. This may sound obvious, but we have to be clear about this – don’t try to use a quantitative research design on qualitative data (or vice versa)!

Research aim(s) and question(s): As with all methodological decisions, your research aim and research questions will heavily influence your research design. For example, if your research aims involve developing a theory from qualitative data, grounded theory would be a strong option. Similarly, if your research aims involve identifying and measuring relationships between variables, one of the experimental designs would likely be a better option.

Time: It’s essential that you consider any time constraints you have, as this will impact the type of research design you can choose. For example, if you’ve only got a month to complete your project, a lengthy design such as ethnography wouldn’t be a good fit.

Resources: Take into account the resources realistically available to you, as these need to factor into your research design choice. For example, if you require highly specialised lab equipment to execute an experimental design, you need to be sure that you’ll have access to that before you make a decision.

Keep in mind that when it comes to research, it’s important to manage your risks and play as conservatively as possible. If your entire project relies on you achieving a huge sample, having access to niche equipment or holding interviews with very difficult-to-reach participants, you’re creating risks that could kill your project. So, be sure to think through your choices carefully and make sure that you have backup plans for any existential risks. Remember that a relatively simple methodology executed well will typically earn better marks than a highly complex methodology executed poorly.

Recap: Key Takeaways

We’ve covered a lot of ground here. Let’s recap by looking at the key takeaways:

- Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final analysis of data.

- Research designs for quantitative studies include descriptive, correlational, experimental and quasi-experimental designs.

- Research designs for qualitative studies include phenomenological, grounded theory, ethnographic and case study designs.

- When choosing a research design, you need to consider a variety of factors, including the type of data you’ll be working with, your research aims and questions, your time and the resources available to you.


Understanding Research Study Designs

In order to find the best possible evidence, it helps to understand the basic designs of research studies. The following basic definitions and examples of clinical research designs follow the “levels of evidence.”

Case Series and Case Reports

Case control studies, cohort studies, randomized controlled studies, double-blind method, meta-analyses, systematic reviews.

These consist either of collections of reports on the treatment of individual patients with the same condition, or of reports on a single patient.

- Case series/reports are used to illustrate an aspect of a condition, the treatment or the adverse reaction to treatment.

- Example: You have a patient who has a condition that you are unfamiliar with. You would search for case reports that could help you decide on a direction of treatment or assist with a diagnosis.

- Case series/reports have no control group (one to compare outcomes), so they have no statistical validity.

- The benefits of case series/reports are that they are easy to understand and can be written up in a very short period of time.

Case Control Studies

Patients who already have a certain condition are compared with people who do not.

- Case control studies are generally designed to estimate the odds (using an odds ratio) of developing the studied condition/disease. They can determine whether there is an association between a condition and a risk factor.

- Example: A study in which colon cancer patients are asked what kinds of food they have eaten in the past and the answers are compared with a selected control group.

- Case control studies are less reliable than either randomized controlled trials or cohort studies.

- A major drawback to case control studies is that one cannot directly obtain absolute risk (i.e. incidence) of a bad outcome.

- The advantages of case control studies are they can be done quickly and are very efficient for conditions/diseases with rare outcomes.
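As a rough illustration of the odds ratio mentioned above, the sketch below computes it from a 2×2 table of exposure by case/control status, together with an approximate 95% confidence interval using the standard log (Woolf) method. The function names and all counts are hypothetical and chosen only for the example.

```python
import math


def odds_ratio(exposed_cases, unexposed_cases, exposed_controls, unexposed_controls):
    """Odds of exposure among cases divided by odds of exposure among controls."""
    odds_in_cases = exposed_cases / unexposed_cases
    odds_in_controls = exposed_controls / unexposed_controls
    return odds_in_cases / odds_in_controls


def odds_ratio_ci(exposed_cases, unexposed_cases, exposed_controls,
                  unexposed_controls, z=1.96):
    """Approximate confidence interval on the log-odds scale (Woolf method)."""
    log_or = math.log(odds_ratio(exposed_cases, unexposed_cases,
                                 exposed_controls, unexposed_controls))
    standard_error = math.sqrt(1 / exposed_cases + 1 / unexposed_cases
                               + 1 / exposed_controls + 1 / unexposed_controls)
    return math.exp(log_or - z * standard_error), math.exp(log_or + z * standard_error)


# Hypothetical counts: 100 cases (60 exposed) and 100 controls (30 exposed).
estimate = odds_ratio(60, 40, 30, 70)
lower, upper = odds_ratio_ci(60, 40, 30, 70)
```

Note that the table yields an odds ratio but not an absolute risk, because the ratio of cases to controls is fixed by the investigator rather than by the population.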

Cohort Studies

Cohort studies, also called longitudinal studies, involve a case-defined population who presently have a certain exposure and/or receive a particular treatment; they are followed over time and compared with another group who are not affected by the exposure under investigation.

- Cohort studies may be either prospective (i.e., exposure factors are identified at the beginning of a study and a defined population is followed into the future), or historical/retrospective (i.e., past medical records for the defined population are used to identify exposure factors).

- Cohort studies are used to investigate the causes of a disease or to evaluate the outcome/impact of treatment when randomized controlled clinical trials are not possible.

- Example: One of the more well-known examples of a cohort study is the Framingham Heart Study, which followed generations of residents of Framingham, Massachusetts.

- Cohort studies are not as reliable as randomized controlled studies, since the two groups may differ in ways other than the variable under study.

- Other problems with cohort studies are that they require a large sample size, are inefficient for rare outcomes, and can take long periods of time.

Randomized Controlled Studies

This is a study in which 1) there are two groups, one treatment group and one control group. The treatment group receives the treatment under investigation, and the control group receives either a placebo (no active treatment) or standard treatment. 2) Patients are randomly assigned to one of the groups.

- Randomized controlled trials are considered the “gold standard” in medical research. They lend themselves best to answering questions about the effectiveness of different therapies or interventions.

- Randomization helps avoid the bias in choice of patients-to-treatment that a physician might be subject to. It also increases the probability that differences between the groups can be attributed to the treatment(s) under study.

- Having a control group allows for a comparison of treatments – e.g., treatment A produced favorable results 56% of the time versus treatment B in which only 25% of patients had favorable results.

- There are certain types of questions on which randomized controlled studies cannot be done for ethical reasons, for instance, if patients were asked to undertake harmful experiences (like smoking) or denied any treatment beyond a placebo when there are known effective treatments.
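The comparison of treatments A and B above can be expressed as a few standard effect measures. The sketch below uses the 56% and 25% figures from the example; the function name is hypothetical, and the number needed to treat (the reciprocal of the absolute difference, conventionally rounded up) is a derived quantity added here for illustration rather than something stated in the text.

```python
import math


def compare_favorable_rates(rate_a, rate_b):
    """Return absolute difference, ratio and number needed to treat (A vs B)."""
    difference = rate_a - rate_b
    if difference <= 0:
        raise ValueError("rate_a must exceed rate_b for this sketch")
    ratio = rate_a / rate_b
    number_needed_to_treat = math.ceil(1 / difference)
    return difference, ratio, number_needed_to_treat


# Treatment A: favorable results 56% of the time; treatment B: 25%.
difference, ratio, nnt = compare_favorable_rates(0.56, 0.25)
```

With these figures the absolute difference is 31 percentage points, so roughly four patients would need to receive treatment A instead of B for one additional favorable result.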

Double-Blind Method

A type of randomized controlled clinical trial/study in which neither medical staff/physician nor the patient knows which of several possible treatments/therapies the patient is receiving.

- Example: Studies of treatments that consist essentially of taking pills are very easy to do double blind: the patient takes one of two pills of identical size, shape, and color, and neither the patient nor the physician needs to know which is which.

- A double blind study is the most rigorous clinical research design because, in addition to the randomization of subjects, which reduces the risk of bias, it can eliminate or minimize the placebo effect, which is a further challenge to the validity of a study.

Meta-Analyses

Meta-analysis is a systematic, objective way to combine data from many studies, usually from randomized controlled clinical trials, and arrive at a pooled estimate of treatment effectiveness and statistical significance.

- Meta-analysis can also combine data from case/control and cohort studies. The advantage to merging these data is that it increases sample size and allows for analyses that would not otherwise be possible.

- They should not be confused with reviews of the literature or systematic reviews.

- Two problems with meta-analysis are publication bias (studies showing no effect or little effect are often not published and just “filed” away) and the quality of the design of the studies from which data is pulled. This can lead to misleading results when all the data on the subject from “published” literature are summarized.

Systematic Reviews

A systematic review is a comprehensive survey of a topic that takes great care to find all relevant studies of the highest level of evidence, published and unpublished, assess each study, synthesize the findings from individual studies in an unbiased, explicit and reproducible way and present a balanced and impartial summary of the findings with due consideration of any flaws in the evidence. In this way it can be used for the evaluation of either existing or new technologies and practices.

A systematic review is more rigorous than a traditional literature review and attempts to reduce the influence of bias. In order to do this, a systematic review follows a formal process:

- Clearly formulated research question

- Published & unpublished (conferences, company reports, “file drawer reports”, etc.) literature is carefully searched for relevant research

- Identified research is assessed according to an explicit methodology

- Results of the critical assessment of the individual studies are combined

- Final results are placed in context, addressing such issues as the quality of the included studies, the impact of bias and the applicability of the findings

- The difference between a systematic review and a meta-analysis is that a systematic review looks at the whole picture (qualitative view), while a meta-analysis looks for the specific statistical picture (quantitative view).

Research Process in the Health Sciences (35:37 min): Overview of the scientific research process in the health sciences. Follows the seven steps: defining the problem, reviewing the literature, formulating a hypothesis, choosing a research design, collecting data, analyzing the data and interpretation and report writing. Includes a set of additional readings and library resources.

Research Study Designs in the Health Sciences (29:36 min): An overview of research study designs used by health sciences researchers. Covers case reports/case series, case control studies, cohort studies, correlational studies, cross-sectional studies, experimental studies (including randomized control trials), systematic reviews and meta-analysis. Additional readings and library resources are also provided.

Types of Study Design


Introduction

Study designs are frameworks used in medical research to gather data and explore a specific research question.

Choosing an appropriate study design is one of many essential considerations before conducting research to minimise bias and yield valid results.

This guide provides a summary of study designs commonly used in medical research, their characteristics, advantages and disadvantages.

Case-report and case-series

A case report is a detailed description of a patient’s medical history, diagnosis, treatment, and outcome. A case report typically documents unusual or rare cases or reports new or unexpected clinical findings.

A case series is a similar study that involves a group of patients sharing a similar disease or condition. A case series involves a comprehensive review of medical records for each patient to identify common features or disease patterns. Case series help better understand a disease’s presentation, diagnosis, and treatment.

While a case report focuses on a single patient, a case series involves a group of patients to provide a broader perspective on a specific disease. Both case reports and case series are important tools for understanding rare or unusual diseases.

Advantages of case series and case reports include:

- Able to describe rare or poorly understood conditions or diseases

- Helpful in generating hypotheses and identifying patterns or trends in patient populations

- Can be conducted relatively quickly and at a lower cost compared to other research designs

Disadvantages of case series and case reports include:

- Prone to selection bias, meaning that the patients included in the series may not be representative of the general population

- Lack a control group, which makes it difficult to conclude the effectiveness of different treatments or interventions

- They are descriptive and cannot establish causality or control for confounding factors

Cross-sectional study

A cross-sectional study aims to measure the prevalence or frequency of a disease in a population at a specific point in time. In other words, it provides a “snapshot” of the population at a single moment in time.

Cross-sectional studies differ from other study designs in that they collect data on both the exposure and the outcome of interest at the same point in time from a sample of individuals in the population. This type of data is used to investigate the distribution of health-related conditions and behaviours in different populations, which is especially useful for guiding the development of public health interventions.

Example of a cross-sectional study

A cross-sectional study might investigate the prevalence of hypertension (the outcome) in a sample of adults in a particular region. The researchers would measure blood pressure levels in each participant and gather information on other factors that could influence blood pressure, such as age, sex, weight, and lifestyle habits (the exposures).
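As a simple illustration of the hypertension example, the sketch below estimates prevalence from a single sample and attaches an approximate 95% confidence interval using the normal approximation. The function name and the counts (240 of 1,000 adults) are hypothetical.

```python
import math


def prevalence_with_ci(cases, sample_size, z=1.96):
    """Point prevalence and a normal-approximation confidence interval."""
    prevalence = cases / sample_size
    standard_error = math.sqrt(prevalence * (1 - prevalence) / sample_size)
    return prevalence, (prevalence - z * standard_error,
                        prevalence + z * standard_error)


# Hypothetical survey: 240 of 1,000 sampled adults have hypertension.
prevalence, (lower, upper) = prevalence_with_ci(240, 1000)
```

The normal approximation is a convenient choice for a sketch; for small samples or prevalences near 0 or 1, other interval methods are generally preferred.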

Advantages of cross-sectional studies include:

- Relatively quick and inexpensive to conduct compared to other study designs, such as cohort or case-control studies

- They can provide a snapshot of the prevalence and distribution of a particular health condition in a population

- They can help to identify patterns and associations between exposure and outcome variables, which can be used to generate hypotheses for further research

Disadvantages of cross-sectional studies include:

- They cannot establish causality, as they do not follow participants over time and cannot determine the temporal sequence between exposure and outcome

- Prone to selection bias, as the sample may not represent the entire population being studied

- They cannot account for confounding variables, which may affect the relationship between the exposure and outcome of interest

Case-control study

A case-control study compares people who have developed a disease of interest (cases) with people who have not developed the disease (controls) to identify potential risk factors associated with the disease.

Once cases and controls have been identified, researchers then collect information about related risk factors, such as age, sex, lifestyle factors, or environmental exposures, from individuals. By comparing the prevalence of risk factors between the cases and the controls, researchers can determine the association between the risk factors and the disease.

Example of a case-control study

A case-control study design might involve comparing a group of individuals with lung cancer (cases) to a group of individuals without lung cancer (controls) to assess the association between smoking (risk factor) and the development of lung cancer.

Advantages of case-control studies include:

- Useful for studying rare diseases, as they allow researchers to selectively recruit cases with the disease of interest

- Useful for investigating potential risk factors for a disease, as the researchers can collect data on many different factors from both cases and controls

- Can be helpful in situations where it is not ethical or practical to manipulate exposure levels or randomise study participants

Disadvantages of case-control studies include:

- Prone to selection bias, as the controls may not be representative of the general population or may have different underlying risk factors than the cases

- Cannot establish causality, as they can only identify associations between factors and disease

- May be limited by the availability of suitable controls, as finding appropriate controls who have similar characteristics to the cases can be challenging

Cohort study

A cohort study follows a group of individuals (a cohort) over time to investigate the relationship between an exposure or risk factor and a particular outcome or health condition. Cohort studies can be further classified into prospective or retrospective cohort studies.

Prospective cohort study

A prospective cohort study is a study in which the researchers select a group of individuals who do not have a particular disease or outcome of interest at the start of the study.

They then follow this cohort over time to track the number of patients who develop the outcome. Before the start of the study, information on exposure(s) of interest may also be collected.

Example of a prospective cohort study

A prospective cohort study might follow a group of individuals who have never smoked and measure their exposure to tobacco smoke over time to investigate the relationship between smoking and lung cancer.

Retrospective cohort study

In contrast, a retrospective cohort study is a study in which the researchers select a group of individuals who have already been exposed to something (e.g. smoking) and look back in time (for example, through patient charts) to see if they developed the outcome (e.g. lung cancer).

The key difference in retrospective cohort studies is that data on exposure and outcome are collected after the outcome has occurred.

Example of a retrospective cohort study

A retrospective cohort study might look at the medical records of smokers and see if they developed a particular adverse event such as lung cancer.

Advantages of cohort studies include:

- Generally considered to be the most appropriate study design for investigating the temporal relationship between exposure and outcome

- Can provide estimates of incidence and relative risk, which are useful for quantifying the strength of the association between exposure and outcome

- Can be used to investigate multiple outcomes or endpoints associated with a particular exposure, which can help to identify unexpected effects or outcomes

Disadvantages of cohort studies include:

- Can be expensive and time-consuming to conduct, particularly for long-term follow-up

- May suffer from selection bias, as the sample may not be representative of the entire population being studied

- May suffer from attrition bias, as participants may drop out or be lost to follow-up over time
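The incidence and relative risk estimates mentioned in the advantages above can be sketched as follows. The function names and the follow-up counts are hypothetical; the sketch assumes complete follow-up, so it ignores the attrition problem just described.

```python
def cumulative_incidence(new_cases, people_at_risk):
    """Proportion of an initially disease-free group that develops the outcome."""
    return new_cases / people_at_risk


def relative_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Incidence in the exposed group divided by incidence in the unexposed group."""
    return (cumulative_incidence(exposed_cases, exposed_total)
            / cumulative_incidence(unexposed_cases, unexposed_total))


# Hypothetical cohort: 30 of 1,000 exposed and 10 of 1,000 unexposed
# participants develop the outcome during follow-up.
incidence_exposed = cumulative_incidence(30, 1000)
incidence_unexposed = cumulative_incidence(10, 1000)
rr = relative_risk(30, 1000, 10, 1000)
```

A relative risk above 1 suggests the outcome is more common in the exposed group; here the exposed group has about three times the incidence of the unexposed group.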

Meta-analysis

A meta-analysis is a type of study that involves extracting outcome data from all relevant studies in the literature and combining the results of multiple studies to produce an overall estimate of the effect size of an intervention or exposure.

Meta-analysis is often conducted alongside a systematic review and can be considered a study of studies. By pooling results in this way, researchers can provide a more comprehensive and reliable estimate of the overall effect size and its confidence interval (a measure of precision).

Meta-analyses can be conducted for a wide range of research questions, including evaluating the effectiveness of medical interventions, identifying risk factors for disease, or assessing the accuracy of diagnostic tests. They are particularly useful when the results of individual studies are inconsistent or when the sample sizes of individual studies are small, as a meta-analysis can provide a more precise estimate of the true effect size.
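One common way to combine study results is inverse-variance (fixed-effect) pooling, sketched below. This is only one of several pooling models and is not necessarily the one a given meta-analysis would use; the function name and the study estimates (imagined here as log odds ratios with their standard errors) are hypothetical.

```python
import math


def fixed_effect_pool(estimates, standard_errors, z=1.96):
    """Inverse-variance weighted average with an approximate confidence interval.

    Each study is weighted by 1 / SE^2, so more precise studies count more.
    """
    weights = [1 / se ** 2 for se in standard_errors]
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, pooled_se, (pooled - z * pooled_se, pooled + z * pooled_se)


# Hypothetical effect sizes from three studies (e.g. log odds ratios).
study_estimates = [0.20, 0.40, 0.30]
study_standard_errors = [0.10, 0.10, 0.20]
pooled, pooled_se, (lower, upper) = fixed_effect_pool(study_estimates,
                                                     study_standard_errors)
```

The pooled standard error is smaller than that of any single study, which is the sense in which pooling gives a more precise estimate. When studies are heterogeneous, a random-effects model is often considered instead.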

When conducting a meta-analysis, researchers must carefully assess the risk of bias in each study to enhance the validity of the meta-analysis. Many aspects of research studies are prone to bias, such as the methodology and the reporting of results. Where studies exhibit a high risk of bias, authors may opt to exclude the study from the analysis or perform a subgroup or sensitivity analysis.

Advantages of a meta-analysis include:

- Combine the results of multiple studies, resulting in a larger sample size and increased statistical power, to provide a more comprehensive and precise estimate of the effect size of an intervention or outcome

- Can help to identify sources of heterogeneity or variability in the results of individual studies by exploring the influence of different study characteristics or subgroups

- Can help to resolve conflicting results or controversies in the literature by providing a more robust estimate of the effect size

Disadvantages of a meta-analysis include:

- Susceptible to publication bias, where studies with statistically significant or positive results are more likely to be published than studies with nonsignificant or negative results. This bias can lead to an overestimation of the treatment effect in a meta-analysis

- May not be appropriate if the studies included are too heterogeneous, as this can make it difficult to draw meaningful conclusions from the pooled results

- Depend on the quality and completeness of the data available from the individual studies and may be limited by the lack of data on certain outcomes or subgroups

Ecological study

An ecological study assesses the relationship between outcome and exposure at a population level or among groups of people rather than studying individuals directly.

The main goal of an ecological study is to observe and analyse patterns or trends at the population level and to identify potential associations or correlations between environmental factors or exposures and health outcomes.

Ecological studies focus on collecting data on population health outcomes, such as disease or mortality rates, and environmental factors or exposures, such as air pollution, temperature, or socioeconomic status.

Example of an ecological study

An ecological study might be used when comparing smoking rates and lung cancer incidence across different countries.
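As a rough illustration of the analysis such a study might involve, the sketch below computes a Pearson correlation between country-level smoking prevalence and lung cancer incidence. All figures are invented for illustration and are not real statistics. The helper `pearson_r` is written out by hand so the example needs only the standard library.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical aggregate data: one value per country, not per person.
smoking_prevalence_pct = [12, 18, 22, 27, 33]
lung_cancer_per_100k = [20, 29, 35, 41, 52]

r = pearson_r(smoking_prevalence_pct, lung_cancer_per_100k)
print(f"Country-level correlation: r = {r:.3f}")
```

Each data point is a whole country, so the correlation describes populations and says nothing directly about any individual smoker.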

Advantages of an ecological study include:

- Provide insights into how social, economic, and environmental factors may impact health outcomes in real-world settings, which can inform public health policies and interventions

- Cost-effective and efficient, often using existing data or readily available data, such as data from national or regional databases

Disadvantages of an ecological study include:

- Prone to the ecological fallacy, which occurs when conclusions about individual-level associations are drawn from population-level differences

- Ecological studies rely on population-level (i.e. aggregate) rather than individual-level data; they cannot establish causal relationships between exposures and outcomes, as the studies do not account for differences or confounders at the individual level
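The ecological fallacy can be shown with a small constructed dataset. In the sketch below, the three groups and their values are entirely hypothetical and were chosen so that the group averages rise together while, inside every group, higher exposure goes with a lower outcome.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical individual-level data: (exposures, outcomes) for each group.
groups = {
    "A": ([1, 2, 3], [5, 4, 3]),
    "B": ([4, 5, 6], [8, 7, 6]),
    "C": ([7, 8, 9], [11, 10, 9]),
}

# Population-level view: one average exposure and outcome per group.
mean_exposure = [sum(x) / len(x) for x, _ in groups.values()]
mean_outcome = [sum(y) / len(y) for _, y in groups.values()]
group_r = pearson_r(mean_exposure, mean_outcome)

# Individual-level view: the correlation inside each group.
within_r = [pearson_r(x, y) for x, y in groups.values()]

print(f"Between-group correlation: {group_r:.2f}")
print("Within-group correlations:", [round(r, 2) for r in within_r])
```

The group-level association is strongly positive, yet the individual-level association within every group is negative, so reading the aggregate pattern as an individual-level one would be misleading.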

Randomised controlled trial

A randomised controlled trial (RCT) is an important study design commonly used in medical research to determine the effectiveness of a treatment or intervention. It is considered the gold standard in research design because it allows researchers to draw cause-and-effect conclusions about the effects of an intervention.

In an RCT, participants are randomly assigned to two or more groups. One group receives the intervention being tested, such as a new drug or a specific medical procedure. In contrast, the other group is a control group and receives either no intervention or a placebo.

Randomisation ensures that each participant has an equal chance of being assigned to either group, thereby minimising selection bias. To reduce bias, an RCT often uses a technique called blinding, in which study participants, researchers, or analysts are kept unaware of participant assignment during the study. The participants are then followed over time, and outcome measures are collected and compared to determine if there is any statistical difference between the intervention and control groups.
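A minimal sketch of simple 1:1 randomisation is shown below. The participant IDs, the seed, and the function name `randomise` are illustrative assumptions. Real trials typically use dedicated, concealed allocation systems and may use block or stratified schemes instead.

```python
import random


def randomise(participant_ids, seed=None):
    """Shuffle participants and split them evenly into two trial arms."""
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"intervention": shuffled[:half], "control": shuffled[half:]}


# Hypothetical participant identifiers P01 to P20.
participants = [f"P{i:02d}" for i in range(1, 21)]
allocation = randomise(participants, seed=2024)

print("Intervention:", sorted(allocation["intervention"]))
print("Control:     ", sorted(allocation["control"]))
```

Because the order is shuffled before the split, no characteristic of a participant (or preference of a researcher) influences which arm they end up in.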

Example of a randomised controlled trial

An RCT might be employed to evaluate the effectiveness of a new smoking cessation program in helping individuals quit smoking compared to the existing standard of care.
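For a trial like this, one simple way to compare the arms is a two-proportion z-test on quit rates. The counts below are hypothetical, and the function name `two_proportion_z_test` is an assumption for illustration. An actual trial would follow its pre-specified analysis plan.

```python
import math


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Return the risk difference, z statistic, and two-sided p-value."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (events_a + events_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


# Hypothetical results: 30 of 100 quit with the new program, 18 of 100 with standard care.
risk_difference, z, p_value = two_proportion_z_test(30, 100, 18, 100)
print(f"Risk difference: {risk_difference:.2f}, z = {z:.2f}, p = {p_value:.3f}")
```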

Advantages of an RCT include:

- Considered the most reliable study design for establishing causal relationships between interventions and outcomes and determining the effectiveness of interventions

- Randomisation of participants to intervention and control groups ensures that the groups are similar at the outset, reducing the risk of selection bias and enhancing internal validity

- Using a control group gives researchers a comparison for the group that received the intervention while controlling for confounding factors

Disadvantages of an RCT include:

- Can raise ethical concerns; for example, it may be considered unethical to withhold an intervention from a control group, especially if the intervention is known to be effective

- Can be expensive and time-consuming to conduct, requiring resources for participant recruitment, randomisation, data collection, and analysis

- Often have strict inclusion and exclusion criteria, which may limit the generalisability of the findings to broader populations

- May not always be feasible or practical for certain research questions, especially in rare diseases or when studying long-term outcomes

Dr Chris Jefferies

- Yuliya L, Qazi MA (eds.). Toronto Notes 2022. Toronto: Toronto Notes for Medical Students Inc; 2022.

- Le T, Bhushan V, Qui C, Chalise A, Kaparaliotis P, Coleman C, Kallianos K. First Aid for the USMLE Step 1 2023. New York: McGraw-Hill Education; 2023.

- Rothman KJ, Greenland S, Lash T. Modern Epidemiology. 3rd ed. Philadelphia: Lippincott Williams & Wilkins; 2008.


What is Research Design? Understand Types of Research Design, with Examples

Have you been wondering “what is research design?” or “what are some research design examples?” Are you unsure about the research design elements or which of the different types of research design best suit your study? Don’t worry! In this article, we’ve got you covered!

What is research design?

A research design is the plan or framework used to conduct a research study. It involves outlining the overall approach and methods that will be used to collect and analyze data in order to answer research questions or test hypotheses. A well-designed research study should have a clear and well-defined research question, a detailed plan for collecting data, and a method for analyzing and interpreting the results. A well-thought-out research design addresses all these features.

Research design elements

Research design elements include the following:

- Clear purpose: The research question or hypothesis must be clearly defined and focused.

- Sampling: This includes decisions about sample size, sampling method, and criteria for inclusion or exclusion. The approach varies for different research design types.

- Data collection: This research design element involves the process of gathering data or information from the study participants or sources. It includes decisions about what data to collect, how to collect it, and the tools or instruments that will be used.

- Data analysis: All research design types require analysis and interpretation of the data collected. This research design element includes decisions about the statistical tests or methods that will be used to analyze the data, as well as any potential confounding variables or biases that may need to be addressed.

- Type of research methodology: This includes decisions about the overall approach for the study.

- Time frame: An important research design element is the time frame, which includes decisions about the duration of the study, the timeline for data collection and analysis, and follow-up periods.

- Ethical considerations: The research design must include decisions about ethical considerations such as informed consent, confidentiality, and participant protection.

- Resources: A good research design takes into account decisions about the budget, staffing, and other resources needed to carry out the study.

The elements of research design should be carefully planned and executed to ensure the validity and reliability of the study findings. Let’s go deeper into the concepts of research design.

Characteristics of research design

Some basic characteristics of research design are common to different research design types. These characteristics of research design are as follows:

- Neutrality: Right from the study assumptions to setting up the study, a neutral stance must be maintained, free of pre-conceived notions. The researcher’s expectations or beliefs should not color the findings or interpretation of the findings. Accordingly, a good research design should address potential sources of bias and confounding factors to be able to yield unbiased and neutral results.

- Reliability: Reliability is one of the characteristics of research design that refers to consistency in measurement over repeated measures and fewer random errors. A reliable research design must allow for results to be consistent, with few errors due to chance.

- Validity: Validity refers to the minimization of nonrandom (systematic) errors. A good research design must employ measurement tools that ensure validity of the results.

- Generalizability: The outcome of the research design should be applicable to a larger population and not just a small sample. A generalized method means the study can be conducted on any part of a population with similar accuracy.

- Flexibility: A research design should allow for changes to be made to the research plan as needed, based on the data collected and the outcomes of the study.

A well-planned research design is critical for conducting a scientifically rigorous study that will generate neutral, reliable, valid, and generalizable results. At the same time, it should allow some level of flexibility.
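The distinction between reliability (few random errors) and validity (few systematic errors) can be illustrated numerically. In the sketch below, three hypothetical scales weigh the same 70 kg object five times. The readings are invented, and the names `bias` and `spread` are illustrative labels for systematic and random error respectively.

```python
from statistics import mean, stdev

TRUE_WEIGHT_KG = 70.0

# Hypothetical repeated readings from three different scales.
readings = {
    "scale_a": [69.9, 70.1, 70.0, 70.2, 69.8],  # consistent and on target
    "scale_b": [72.0, 72.1, 71.9, 72.0, 72.0],  # consistent but reads high
    "scale_c": [66.0, 74.0, 69.0, 72.0, 69.0],  # on target on average, but noisy
}

summary = {}
for name, values in readings.items():
    bias = mean(values) - TRUE_WEIGHT_KG  # systematic error (validity)
    spread = stdev(values)                # random error (reliability)
    summary[name] = (bias, spread)
    print(f"{name}: bias = {bias:+.2f} kg, spread = {spread:.2f} kg")
```

Scale B is reliable but not valid, because its readings agree with each other yet are all shifted. Scale C has no bias on average but low reliability, because individual readings vary widely.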

Different types of research design

A research design is essential to systematically investigate, understand, and interpret phenomena of interest. Let’s look at different types of research design and research design examples.

Broadly, research design types can be divided into qualitative and quantitative research.

Qualitative research is subjective and exploratory. It determines relationships between collected data and observations. It is usually carried out through interviews with open-ended questions, observations that are described in words, etc.

Quantitative research is objective and employs statistical approaches. It establishes the cause-and-effect relationship among variables using different statistical and computational methods. This type of research is usually done using surveys and experiments.

Qualitative research vs. Quantitative research

| Qualitative research | Quantitative research |
| --- | --- |
| Deals with subjective aspects, e.g., experiences, beliefs, perspectives, and concepts. | Measures different types of variables and describes frequencies, averages, correlations, etc. |
| Deals with non-numerical data, such as words, images, and observations. | Tests hypotheses about relationships between variables. Results are presented numerically and statistically. |
| In qualitative research design, data are collected via direct observations, interviews, focus groups, and naturally occurring data. Methods for conducting qualitative research are grounded theory, thematic analysis, and discourse analysis. | Quantitative research design is empirical. Data collection methods involved are experiments, surveys, and observations expressed in numbers. The research design categories under this are descriptive, experimental, correlational, diagnostic, and explanatory. |
| Data analysis involves interpretation and narrative analysis. | Data analysis involves statistical analysis and hypothesis testing. |
| The reasoning used to synthesize data is inductive. | The reasoning used to synthesize data is deductive. |
| Typically used in fields such as sociology, linguistics, and anthropology. | Typically used in fields such as economics, ecology, statistics, and medicine. |
| Example: Focus group discussions with women farmers about climate change perception. | Example: Testing the effectiveness of a new treatment for insomnia. |

Qualitative research design types and qualitative research design examples

The following will familiarize you with the research design categories in qualitative research:

- Grounded theory: This design is used to investigate research questions that have not previously been studied in depth. Also referred to as exploratory design, it creates sequential guidelines, offers strategies for inquiry, and makes data collection and analysis more efficient in qualitative research.

Example: A researcher wants to study how people adopt a certain app. The researcher collects data through interviews and then analyzes the data to look for patterns. These patterns are used to develop a theory about how people adopt that app.

- Thematic analysis: This design is used to compare the data collected in past research to find similar themes in qualitative research.

Example: A researcher examines an interview transcript to identify common themes, say, topics or patterns emerging repeatedly.

- Discourse analysis: This research design deals with language or social contexts used in data gathering in qualitative research.

Example: Identifying ideological frameworks and viewpoints of writers of a series of policies.

Quantitative research design types and quantitative research design examples

Note the following research design categories in quantitative research:

- Descriptive research design: This quantitative research design is applied where the aim is to identify characteristics, frequencies, trends, and categories. It often does not begin with a hypothesis. The basis of this research type is a description of an identified variable. This research design type describes the “what,” “when,” “where,” or “how” of phenomena (but not the “why”).

Example: A study on the different income levels of people who use nutritional supplements regularly.