

This chapter covers the semantics of the Groovy programming language.

1. Statements

1.1. Variable definition

Variables can be defined using either their type (like String) or the keyword def (or var), followed by a variable name:

def and var act as a type placeholder, i.e. a replacement for the type name, when you do not want to give an explicit type. It could be that you don’t care about the type at compile time, or that you are relying on type inference (when using Groovy’s static features). It is mandatory for variable definitions to have a type or placeholder. If the type is left out, the name is deemed to refer to an existing variable (presumably declared earlier). For scripts, undeclared variables are assumed to come from the Script binding. In other cases, you will get a missing property error (dynamic Groovy) or a compile-time error (static Groovy). If you think of def and var as aliases of Object, you will understand in an instant.

Variable definitions can provide an initial value, in which case it’s like having a declaration and assignment (which we cover next) all in one.
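Here is a minimal sketch of the declaration styles described above. The variable names are illustrative, and var requires Groovy 3 or later.

```groovy
// Declaration with an explicit type and an initial value
String greeting = 'hello'

// 'def' and 'var' are type placeholders: think of them as Object
def count = 3
var ratio = 0.5

// The runtime types come from the assigned values
assert greeting instanceof String
assert count instanceof Integer
assert ratio instanceof BigDecimal   // decimal literals are BigDecimal by default in Groovy

// A def/var variable may later hold a value of another type
count = 'three'
assert count instanceof String
```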

1.2. Variable assignment

You can assign values to variables for later use. Try the following:

1.2.1. Multiple assignment

Groovy supports multiple assignment, i.e. assigning several variables at once, e.g.:

You can provide types as part of the declaration if you wish:

As well as being used when declaring variables, it also applies to existing variables:

The syntax works for arrays as well as lists, as well as methods that return either of these:
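The sketch below uses made-up values to walk through the multiple-assignment forms just described: a plain declaration, a typed declaration, assignment to existing variables, and an array returned by a method.

```groovy
// Declaring and assigning several variables at once
def (a, b, c) = [10, 20, 'foo']
assert a == 10 && b == 20 && c == 'foo'

// Types can be given as part of the declaration
def (int i, String j) = [10, 'foo']
assert i == 10 && j == 'foo'

// Assigning to variables that already exist
def nums = [1, 3, 5]
def x, y, z
(x, y, z) = nums
assert x == 1 && y == 3 && z == 5

// Arrays work too: String.split() returns a String[]
def (day, month, year) = '18th June 2009'.split()
assert month == 'June' && year == '2009'
```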

1.2.2. Overflow and underflow

If the left-hand side has too many variables, the excess ones are filled with nulls:

If the right-hand side has too many values, the extra ones are ignored:
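A short sketch of both cases, using arbitrary values:

```groovy
// Too many variables on the left: the excess ones are set to null
def (a, b, c) = [1, 2]
assert a == 1 && b == 2 && c == null

// Too many values on the right: the extra ones are ignored
def (d, e) = [1, 2, 3]
assert d == 1 && e == 2
```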

1.2.3. Object destructuring with multiple assignment

In the section describing Groovy’s operators, the case of the subscript operator has been covered, explaining how you can override the getAt()/putAt() methods.

With this technique, we can combine multiple assignments and the subscript operator methods to implement object destructuring.

Consider the following immutable Coordinates class, containing a pair of longitude and latitude doubles, and notice our implementation of the getAt() method:

Now let’s instantiate this class and destructure its longitude and latitude:
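Here is a sketch of such a class. It assumes the class is made immutable with the groovy.transform.Immutable annotation. The property names, the exception type and the message are illustrative choices, not requirements.

```groovy
import groovy.transform.Immutable

@Immutable
class Coordinates {
    double latitude
    double longitude

    // Called by multiple assignment: index 0 feeds the first variable, 1 the second
    double getAt(int idx) {
        if (idx == 0) latitude
        else if (idx == 1) longitude
        else throw new IndexOutOfBoundsException("Wrong coordinate index, use 0 or 1")
    }
}

def coordinates = new Coordinates(latitude: 43.23, longitude: 3.67)
def (la, lo) = coordinates   // calls coordinates.getAt(0) and coordinates.getAt(1)
assert la == 43.23
assert lo == 3.67
```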

1.3. Control structures

1.3.1. Conditional structures

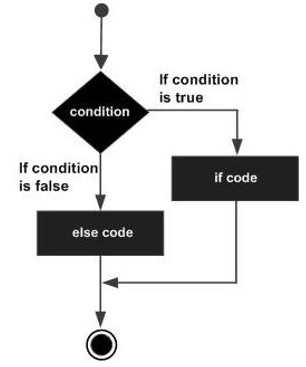

Groovy supports the usual if-else syntax from Java:

Groovy also supports the normal Java "nested" if then else if syntax:

The switch statement in Groovy is backward compatible with Java code, so you can fall through cases, sharing the same code for multiple matches.

One difference, though, is that the Groovy switch statement can handle any kind of switch value, and different kinds of matching can be performed.

Switch supports the following kinds of comparisons:

Class case values match if the switch value is an instance of the class

Regular expression case values match if the toString() representation of the switch value matches the regex

Collection case values match if the switch value is contained in the collection. This also includes ranges (since they are Lists)

Closure case values match if calling the closure returns a result which is true according to the Groovy truth

If none of the above are used, then the case value matches if it equals the switch value
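The illustrative sketch below exercises each kind of comparison in one switch. The classify method and its return strings are hypothetical. The cases are ordered so that each sample value reaches the case intended for it.

```groovy
String classify(x) {
    switch (x) {
        case 'foo':                              // plain equality
            return 'exact match'
        case ~/b.*/:                             // regex, applied to x.toString()
            return 'regex match'
        case [4, 5, 6, 'inList']:                // membership in a collection
            return 'in list'
        case { it instanceof List && it.empty }: // closure, result judged by Groovy truth
            return 'empty list'
        case 'x':                                // Java-style fall-through:
        case 'y':                                // 'x' and 'y' share one branch
            return 'x or y'
        case 12..30:                             // ranges are lists too
            return 'in range'
        case Integer:                            // instance of a class
            return 'other Integer'
        default:
            return 'no match'
    }
}

assert classify('foo') == 'exact match'
assert classify('bar') == 'regex match'
assert classify(5) == 'in list'
assert classify([]) == 'empty list'
assert classify('x') == 'x or y'
assert classify(20) == 'in range'
assert classify(42) == 'other Integer'
assert classify(3.5) == 'no match'
```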

Groovy also supports switch expressions as shown in the following example:
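Here is a minimal sketch of a switch expression. It assumes Groovy 4 or later, the release that introduced this form. partnerOf is a hypothetical helper.

```groovy
String partnerOf(String person) {
    // The whole switch yields a value; each arrow case produces one result
    def partner = switch (person) {
        case 'Romeo'  -> 'Juliet'
        case 'Adam'   -> 'Eve'
        case 'Bonnie' -> 'Clyde'
        default       -> 'unknown'
    }
    return partner
}

assert partnerOf('Romeo') == 'Juliet'
assert partnerOf('Zed') == 'unknown'
```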

1.3.2. Looping structures

Groovy supports the standard Java / C for loop:

The more elaborate form of Java’s classic for loop, with comma-separated expressions, is now supported. Example:
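This small sketch computes factorials. It declares two variables in the initialization part and uses two comma-separated update expressions. The variable names are illustrative.

```groovy
def facts = []
def count = 5
// 'i++' runs before 'fact *= i', so fact is multiplied by the new i
for (int fact = 1, i = 1; i <= count; i++, fact *= i) {
    facts << fact
}
assert facts == [1, 2, 6, 24, 120]
```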

Groovy has supported multi-assignment statements since Groovy 1.6:

These can now appear in for loops:
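An illustrative sketch follows. Note that u++ on a String calls String.next(), which increments the last character.

```groovy
// Multi-assignment with types, outside a loop
def (String x, int y) = ['foo', 42]
assert x == 'foo' && y == 42

// The same construct in the initialization part of a for loop
def baNums = []
for (def (String u, int v) = ['bar', 42]; v < 45; u++, v++) {
    baNums << (u + ' ' + v)
}
assert baNums == ['bar 42', 'bas 43', 'bat 44']
```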

The for loop in Groovy is much simpler and works with any kind of array, collection, Map, etc.
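A sketch of for-in over several kinds of iterable values; the data is arbitrary:

```groovy
// Ranges
def x = 0
for (i in 0..9) { x += i }
assert x == 45

// Lists and arrays
x = 0
for (i in [0, 1, 2, 3, 4]) { x += i }
assert x == 10
def array = (0..4).toArray()
x = 0
for (i in array) { x += i }
assert x == 10

// Maps: iterate over entries or over values
def map = [a: 1, b: 2, c: 3]
x = 0
for (e in map) { x += e.value }
assert x == 6
x = 0
for (v in map.values()) { x += v }
assert x == 6

// Strings: iterate over characters
def chars = []
for (c in 'abc') { chars << c }
assert chars == ['a', 'b', 'c']
```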

Groovy supports the usual while (…) { … } loops like Java:

Java’s classic do/while loop is now supported. Example:
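A minimal sketch of both loop forms, with arbitrary values:

```groovy
// while loop: the condition is checked before each iteration
def x = 0
def y = 5
while (y-- > 0) {
    x++
}
assert x == 5

// do/while loop: the body runs at least once, the condition is checked afterwards
def count = 5
def fact = 1
do {
    fact *= count--
} while (count > 1)
assert fact == 120
```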

1.3.3. Exception handling

Exception handling is the same as in Java.

1.3.4. try / catch / finally

You can specify a complete try-catch-finally, a try-catch, or a try-finally set of blocks.

We can put code within a 'finally' clause following a matching 'try' clause, so that regardless of whether the code in the 'try' clause throws an exception, the code in the finally clause will always execute:
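In this minimal sketch, the list named log only records the order in which the blocks run:

```groovy
def log = []
try {
    log << 'try'
    Integer.parseInt('not a number')   // throws NumberFormatException
    log << 'never reached'
} catch (NumberFormatException e) {
    log << 'catch'
} finally {
    log << 'finally'                   // runs whether or not an exception was thrown
}
assert log == ['try', 'catch', 'finally']

// try/finally without catch: the finally block also runs on the normal path
def z
try {
    z = 'assigned'
} finally {
    log << 'finally again'
}
assert z == 'assigned'
```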

1.3.5. Multi-catch

With the multi-catch block (since Groovy 2.0), we’re able to define several exceptions to be caught and handled by the same catch block:
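This sketch assumes a hypothetical describe helper. It runs a closure and reports which exception, if any, the single multi-catch block handled.

```groovy
String describe(Closure action) {
    try {
        action()
        return 'no error'
    } catch (IOException | NullPointerException e) {   // one block, two exception types
        return 'handled ' + e.getClass().simpleName
    }
}

assert describe({ throw new IOException('boom') }) == 'handled IOException'
assert describe({ throw new NullPointerException() }) == 'handled NullPointerException'
assert describe({ 42 }) == 'no error'
```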

1.3.6. ARM try with resources

Groovy often provides better alternatives to Java 7’s try-with-resources statement for Automatic Resource Management (ARM). That syntax is now supported for Java programmers migrating to Groovy who still want to use the old style:
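This sketch assumes a hypothetical TrackedResource class that records when it is opened and closed. That makes it possible to check the automatic closing, which happens in reverse order of creation as in Java.

```groovy
class TrackedResource implements AutoCloseable {
    final String name
    final List<String> log

    TrackedResource(String name, List<String> log) {
        this.name = name
        this.log = log
        log << ('open ' + name)
    }

    @Override
    void close() {
        log << ('close ' + name)
    }
}

def events = []
try (TrackedResource first = new TrackedResource('first', events);
     TrackedResource second = new TrackedResource('second', events)) {
    events << 'body'
}
// Both resources were closed automatically, the last one opened being closed first
assert events == ['open first', 'open second', 'body', 'close second', 'close first']
```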

Which yields the following output:

1.4. Power assertion

Although Groovy shares the assert keyword with Java, assert behaves very differently in Groovy. First of all, an assertion in Groovy is always executed, independently of the -ea flag of the JVM. This makes it a first-class choice for unit tests. The notion of "power asserts" is directly related to how the Groovy assert behaves.

A power assertion is decomposed into 3 parts:

The result of the assertion is very different from what you would get in Java. If the assertion is true, then nothing happens. If the assertion is false, then it provides a visual representation of the value of each sub-expression of the expression being asserted. For example:
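Here is a minimal sketch with illustrative values. It catches the AssertionError only so that the rendered message can be inspected. The exact layout of that message may differ between Groovy versions.

```groovy
def x = 2
def y = 7

String rendered = null
try {
    assert x * 2 == y          // false: x * 2 is 4, y is 7
} catch (AssertionError e) {   // failed power asserts are AssertionErrors
    rendered = e.message
}
// The message repeats the expression and shows the values of x, x * 2, y and the comparison
println rendered
```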

Will yield:

Power asserts become very interesting when the expressions are more complex, like in the next example:

Which will print the value for each sub-expression:

If you don’t want a pretty-printed error message like the one above, you can fall back to a custom error message by changing the optional message part of the assertion, like in this example:
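The following sketch uses illustrative values and again catches the AssertionError to inspect it. The custom text given after the colon ends up in the error message.

```groovy
def a = [1, 2, 3]
def b = 5

String message = null
try {
    assert a.sum() == b : "sum of ${a} should be ${b}"   // optional message part after ':'
} catch (AssertionError e) {
    message = e.message
}
println message
```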

Which will print the following error message:

1.5. Labeled statements

Any statement can be associated with a label. Labels do not impact the semantics of the code and can be used to make the code easier to read, like in the following example:

Despite not changing the semantics of the labeled statement, it is possible to use labels in the break instruction as a target for a jump, as in the next example. However, even if this is allowed, this coding style is generally considered bad practice:
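An illustrative sketch of a labeled break that leaves two nested loops at once. The label name outer and the search condition are arbitrary.

```groovy
// Find the first pair (i, j) whose product is 6
def found = null
outer: for (int i = 0; i < 5; i++) {
    for (int j = 0; j < 5; j++) {
        if (i * j == 6) {
            found = [i, j]
            break outer          // exits the loop labeled 'outer', not just the inner one
        }
    }
}
assert found == [2, 3]
```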

It is important to understand that by default labels have no impact on the semantics of the code, however they belong to the abstract syntax tree (AST) so it is possible for an AST transformation to use that information to perform transformations over the code, hence leading to different semantics. This is in particular what the Spock Framework does to make testing easier.

2. Expressions

Expressions are the building blocks of Groovy programs that are used to reference existing values and execute code to create new ones.

Groovy supports many of the same kinds of expressions as Java, including:

Groovy also has some of its own special expressions:

Groovy also expands on the normal dot-notation used in Java for member access. Groovy provides special support for accessing hierarchical data structures by specifying the path in the hierarchy of some data of interest. These Groovy path expressions are known as GPath expressions.

2.1. GPath expressions

GPath is a path expression language integrated into Groovy which allows parts of nested structured data to be identified. In this sense, it has similar aims and scope as XPath does for XML. GPath is often used in the context of processing XML, but it really applies to any object graph. Where XPath uses a filesystem-like path notation, a tree hierarchy with parts separated by a slash /, GPath uses a dot-object notation to perform object navigation.

As an example, you can specify a path to an object or element of interest:

a.b.c → for XML, yields all the c elements inside b inside a

a.b.c → for POJOs, yields the c properties for all the b properties of a (sort of like a.getB().getC() in JavaBeans)

In both cases, the GPath expression can be viewed as a query on an object graph. For POJOs, the object graph is most often built by the program being written through object instantiation and composition; for XML processing, the object graph is the result of parsing the XML text, most often with classes like XmlParser or XmlSlurper. See Processing XML for more in-depth details on consuming XML in Groovy.

2.1.1. Object navigation

Let’s see an example of a GPath expression on a simple object graph, the one obtained using Java reflection. Suppose you are in a non-static method of a class having another method named aMethodFoo:

the following GPath expression will get the name of that method:

More precisely, the above GPath expression produces a list of String, each being the name of an existing method on this where that name ends with Foo.

Now, given the following methods also defined in that class:

then the following GPath expression will get the names of (1) and (3), but not (2) or (0):
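Here is a self-contained sketch of these two GPath expressions. It assumes a hypothetical GPathDemo class that holds methods (0) to (3). getMethods() returns methods in no particular order, so the results are compared as sets.

```groovy
class GPathDemo {
    void aMethodFoo() {}        // (0)
    void aMethodBar() {}        // (1)
    void anotherFooMethod() {}  // (2)
    void aSecondMethodBar() {}  // (3)

    // A Pattern passed to grep must match the whole name, so (2) never matches ~/.*Foo/
    def fooNames() { this.class.methods.name.grep(~/.*Foo/) }
    def barNames() { this.class.methods.name.grep(~/.*Bar/) }
}

def demo = new GPathDemo()
assert demo.fooNames() as Set == ['aMethodFoo'] as Set
assert demo.barNames() as Set == ['aMethodBar', 'aSecondMethodBar'] as Set
```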

2.1.2. Expression deconstruction

We can decompose the expression this.class.methods.name.grep(~/.*Bar/) to get an idea of how a GPath is evaluated:

this.class: property accessor, equivalent to this.getClass() in Java, yields a Class object.

this.class.methods: property accessor, equivalent to this.getClass().getMethods(), yields an array of Method objects.

this.class.methods.name: applies a property accessor on each element of an array and produces a list of the results.

this.class.methods.name.grep(~/.*Bar/): calls method grep on the list yielded by this.class.methods.name, keeping only the elements that match the pattern, and produces a list of the results.

One powerful feature of GPath expressions is that property access on a collection is converted to a property access on each element of the collection, with the results collected into a collection. Therefore, the expression this.class.methods.name could be expressed as follows in Java:

Array access notation can also be used in a GPath expression where a collection is present:
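This sketch uses a hypothetical list of maps as the object graph. It mixes property spreading with index access.

```groovy
def people = [
    [name: 'Ada',  langs: ['Groovy', 'Java']],
    [name: 'Alan', langs: ['Kotlin']]
]
assert people.name == ['Ada', 'Alan']                    // property access spread over the list
assert people.name[1] == 'Alan'                          // index into the collected results
assert people[0].langs[-1] == 'Java'                     // index first, then navigate
assert people.langs == [['Groovy', 'Java'], ['Kotlin']]
```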

2.1.3. GPath for XML navigation

Here is an example with an XML document and various forms of GPath expressions:
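This sketch assumes the groovy-xml module is on the classpath. That module ships with the standard Groovy distribution. The document structure and values are made up for illustration.

```groovy
import groovy.xml.XmlSlurper

def xmlText = '''<root>
  <level>
    <sublevel id="1">
      <keyVal><key>mykey</key><value>value 123</value></keyVal>
    </sublevel>
    <sublevel id="2">
      <keyVal><key>anotherKey</key><value>42</value></keyVal>
      <keyVal><key>mykey</key><value>fizzbuzz</value></keyVal>
    </sublevel>
  </level>
</root>'''
def root = new XmlSlurper().parseText(xmlText)

assert root.level.size() == 1                                             // one <level> under <root>
assert root.level.sublevel.size() == 2                                    // every <sublevel> inside <level>
assert root.level.sublevel.findAll { it.@id.text() == '1' }.size() == 1   // filter on an attribute
assert root.level.sublevel[1].keyVal[0].key.text() == 'anotherKey'        // index into the results
assert root.level.sublevel.keyVal.key.collect { it.text() } == ['mykey', 'anotherKey', 'mykey']
```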

Further details about GPath expressions for XML are in the XML User Guide.

3. Promotion and coercion

3.1. Number promotion

The rules of number promotion are specified in the section on math operations.

3.2. Closure to type coercion

A SAM type is a type which defines a single abstract method. This includes:

3.2.1. Assigning a closure to a SAM type

Any closure can be converted into a SAM type using the as operator:

However, the as Type expression is optional since Groovy 2.2.0. You can omit it and simply write:

which means you are also allowed to use method pointers, as shown in the following example:
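This sketch uses a hypothetical single-method interface named StringFilter. It shows explicit coercion, implicit coercion on assignment, and coercion of a method pointer.

```groovy
interface StringFilter {
    boolean accept(String s)
}

// Explicit coercion with 'as'
StringFilter explicit = { String s -> s.contains('G') } as StringFilter

// Since Groovy 2.2.0 the 'as' part can be omitted on assignment
StringFilter implicit = { String s -> s.contains('G') }

// Method pointers are coerced the same way
boolean startsWithG(String s) { s.startsWith('G') }
StringFilter viaPointer = this.&startsWithG

assert explicit.accept('Groovy')
assert !implicit.accept('Java')
assert viaPointer.accept('Grails')
```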

3.2.2. Calling a method accepting a SAM type with a closure

The second and probably more important use case for closure to SAM type coercion is calling a method which accepts a SAM type. Imagine the following method:

Then you can call it with a closure, without having to create an explicit implementation of the interface:

But since Groovy 2.2.0, you are also able to omit the explicit coercion and call the method as if it used a closure:
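This sketch uses the same kind of hypothetical StringFilter interface, plus a hypothetical keep method that accepts it.

```groovy
interface StringFilter {
    boolean accept(String s)
}

List<String> keep(List<String> source, StringFilter filter) {
    source.findAll { filter.accept(it) }
}

// Explicit coercion of the closure...
assert keep(['Java', 'Groovy'], { it.contains('G') } as StringFilter) == ['Groovy']
// ...or, since Groovy 2.2.0, a plain closure placed outside the parentheses
assert keep(['Java', 'Groovy']) { it.contains('G') } == ['Groovy']
```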

As you can see, this has the advantage of letting you use the closure syntax for method calls, that is to say, put the closure outside the parentheses, improving the readability of your code.

3.2.3. Closure to arbitrary type coercion

In addition to SAM types, a closure can be coerced to any type, and in particular to interfaces. Let’s define the following interface:

You can coerce a closure into the interface using the as keyword:

This produces a class for which all methods are implemented using the closure:

But it is also possible to coerce a closure to any class. For example, we can replace the interface that we defined with a class without changing the assertions:
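This sketch uses a hypothetical FooBar interface and a FooBarClass class that declares the same methods. In both cases the single closure backs every method.

```groovy
interface FooBar {
    int foo()
    void bar()
}

def viaInterface = { 123 } as FooBar
assert viaInterface.foo() == 123
viaInterface.bar()               // also runs the closure; its result is discarded

// The same works with a (non-final) class instead of an interface
class FooBarClass {
    int foo() { 1 }
    void bar() { }
}
def viaClass = { 123 } as FooBarClass
assert viaClass.foo() == 123
```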

3.3. Map to type coercion

Usually, using a single closure to implement an interface or a class with multiple methods is not the way to go. As an alternative, Groovy allows you to coerce a map into an interface or a class. In that case, keys of the map are interpreted as method names, while the values are the method implementations. The following example illustrates the coercion of a map into an Iterator:
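Here is a sketch of such a map-backed Iterator that counts down from 10. The key i is ordinary state shared by the closures.

```groovy
def map
map = [
    i      : 10,
    hasNext: { map.i > 0 },   // implements Iterator.hasNext()
    next   : { map.i-- }      // implements Iterator.next(): returns i, then decrements it
]
def iter = map as Iterator

def values = []
while (iter.hasNext()) {
    values << iter.next()
}
assert values == (10..1).toList()
```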

Of course, this is a rather contrived example, but it illustrates the concept. You only need to implement those methods that are actually called, but if a method that doesn’t exist in the map is called, a MissingMethodException or an UnsupportedOperationException is thrown, depending on the arguments passed to the call, as in the following example:

The type of the exception depends on the call itself:

MissingMethodException if the arguments of the call do not match those from the interface/class

UnsupportedOperationException if the arguments of the call match one of the overloaded methods of the interface/class
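This sketch uses a hypothetical interface X whose map implementation only provides f(). It catches each exception to show which one is raised.

```groovy
interface X {
    void f()
    void g(int n)
}
def x = [f: { 'f called' }] as X

x.f()                                    // implemented by the map: fine

def missing = null
try {
    x.g()                                // no g() taking zero arguments exists
} catch (MissingMethodException e) {
    missing = e
}

def unsupported = null
try {
    x.g(5)                               // g(int) exists on X but is not in the map
} catch (UnsupportedOperationException e) {
    unsupported = e
}
assert missing != null
assert unsupported != null
```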

3.4. String to enum coercion

Groovy allows transparent coercion of String (or GString) values to enum values. Imagine you define the following enum:

then you can assign a string to the enum without having to use an explicit as coercion:

It is also possible to use a GString as the value:

However, this would throw a runtime error (IllegalArgumentException):

Note that it is also possible to use implicit coercion in switch statements:

In particular, note how the case statements use string constants. But if you call a method that takes an enum with a String argument, you still have to use an explicit as coercion:
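A sketch covering these cases, with a hypothetical State enum and switchState method:

```groovy
enum State {
    up,
    down
}

// String and GString values are coerced on assignment
State st = 'up'
assert st == State.up
def val = 'down'
State st2 = "${val}"
assert st2 == State.down

// An unknown name fails at runtime
def failed = false
try {
    State st3 = 'not an enum value'
} catch (IllegalArgumentException e) {
    failed = true
}
assert failed

// String constants work as case values when switching over an enum
State switchState(State s) {
    switch (s) {
        case 'up':   return State.down
        case 'down': return State.up
    }
}
// ...but a String passed to an enum-typed parameter needs an explicit 'as'
assert switchState('up' as State) == State.down
assert switchState(State.down) == State.up
```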

3.5. Custom type coercion

It is possible for a class to define custom coercion strategies by implementing the asType method. Custom coercion is invoked using the as operator and is never implicit. As an example, imagine you defined two classes, Polar and Cartesian, like in the following example:

And that you want to convert from polar coordinates to cartesian coordinates. One way of doing this is to define the asType method in the Polar class:

which allows you to use the as coercion operator:

Putting it all together, the Polar class looks like this:
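Here is a sketch of the two classes. It assumes double properties and a Polar.asType method that throws ClassCastException for unsupported targets. That fallback is a design choice of this sketch, not something the original prescribes.

```groovy
class Cartesian {
    double x
    double y
}

class Polar {
    double r
    double phi

    // Invoked by 'polar as Cartesian'; custom coercion is never applied implicitly
    def asType(Class target) {
        if (Cartesian == target) {
            return new Cartesian(x: r * Math.cos(phi), y: r * Math.sin(phi))
        }
        throw new ClassCastException("Cannot convert Polar to ${target.name}")
    }
}

def polar = new Polar(r: 1.0, phi: Math.PI / 2)
def cartesian = polar as Cartesian
assert Math.abs(cartesian.x) < 1e-9         // cos(pi/2) is 0 up to rounding
assert Math.abs(cartesian.y - 1.0) < 1e-9
```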

but it is also possible to define asType outside of the Polar class, which can be practical if you want to define custom coercion strategies for "closed" classes or classes for which you don’t own the source code, for example using a metaclass:
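Here is a sketch of the metaclass variant. It assumes Polar has no asType method of its own. Inside the closure, delegate refers to the Polar instance being converted.

```groovy
// Stand-in for a class whose source we do not control
class Polar {
    double r
    double phi
}
class Cartesian {
    double x
    double y
}

// Add the coercion from outside, through Polar's metaclass
Polar.metaClass.asType = { Class target ->
    if (Cartesian == target) {
        return new Cartesian(x: delegate.r * Math.cos(delegate.phi),
                             y: delegate.r * Math.sin(delegate.phi))
    }
    throw new ClassCastException("Cannot convert Polar to ${target.name}")
}

def cartesian = new Polar(r: 2.0, phi: 0.0) as Cartesian
assert cartesian.x == 2.0d
assert Math.abs(cartesian.y) < 1e-9
```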

3.6. Class literals vs variables and the as operator

Using the as keyword is only possible if you have a static reference to a class, like in the following code:

But what if you get the class by reflection, for example by calling Class.forName ?

Trying to use the reference to the class with the as keyword would fail:

It is failing because the as keyword only works with class literals. Instead, you need to call the asType method:
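This sketch uses a hypothetical Greeter interface. The class is held in a Class variable, standing in for a reflective lookup such as Class.forName.

```groovy
interface Greeter {
    String greet()
}

// 'as' works with a class literal known at compile time
def greeter = { 'Hello' } as Greeter
assert greeter.greet() == 'Hello'

// With the class only available in a variable, '{ ... } as clazz' would not compile,
// so asType is called explicitly instead
Class clazz = Greeter
def dynamicGreeter = { 'Hi' }.asType(clazz)
assert dynamicGreeter instanceof Greeter
assert dynamicGreeter.greet() == 'Hi'
```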

4. Optionality

4.1. Optional parentheses

Method calls can omit the parentheses if there is at least one parameter and there is no ambiguity:

Parentheses are required for method calls without parameters or ambiguous method calls:
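A short sketch of both rules, using standard JDK methods:

```groovy
// Parentheses omitted: at least one argument and no ambiguity
def maximum = Math.max 5, 10
assert maximum == 10

def items = []
items.add 'groovy'
assert items == ['groovy']

// Parentheses required: a call without arguments, and nested calls
assert items.size() == 1
assert Math.max(Math.min(3, 7), 5) == 5
```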

4.2. Optional semicolons

In Groovy, semicolons at the end of a line can be omitted if the line contains only a single statement.

This means that:

can be more idiomatically written as:

Multiple statements in a line require semicolons to separate them:

4.3. Optional return keyword

In Groovy, the last expression evaluated in the body of a method or a closure is returned. This means that the return keyword is optional.

Can be shortened to:

4.4. Optional public keyword

By default, Groovy classes and methods are public. Therefore this class:

is identical to this class:
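Reflection is one way to confirm the default visibility. The Server class below is a hypothetical example declared without any modifiers.

```groovy
import java.lang.reflect.Modifier

class Server {
    String describe() { 'a server' }
}

Class serverClass = Server
assert Modifier.isPublic(serverClass.getModifiers())                         // the class is public
assert Modifier.isPublic(serverClass.getMethod('describe').getModifiers())   // and so is the method
```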

5. The Groovy Truth

Groovy decides whether an expression is true or false by applying the rules given below.

5.1. Boolean expressions

True if the corresponding Boolean value is true.

5.2. Collections and arrays

Non-empty Collections and arrays are true.

5.3. Matchers

True if the Matcher has at least one match.

5.4. Iterators and enumerations

Iterators and Enumerations with further elements are coerced to true.

5.5. Maps

Non-empty Maps are evaluated to true.

5.6. Strings

Non-empty Strings, GStrings and CharSequences are coerced to true.

5.7. Numbers

Non-zero numbers are true.

5.8. Object references

Non-null object references are coerced to true.
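A compact sketch that checks each rule above with arbitrary values:

```groovy
assert true && !false                    // booleans

assert [1, 2, 3] && ![]                  // collections
assert ([0] as int[]) && !([] as int[])  // arrays

assert ('a' =~ /a/) && !('a' =~ /b/)     // matchers: at least one match

assert [0].iterator() && ![].iterator()  // iterators with further elements

assert [one: 1] && ![:]                  // maps

assert 'a' && !''                        // strings
def empty = ''
assert !"$empty"                         // an empty GString is false too

assert 1 && 3.5 && !0                    // numbers

assert new Object() && !null             // object references
```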

5.9. Customizing the truth with asBoolean() methods

To customize whether Groovy evaluates your object to true or false, implement the asBoolean() method:

Groovy will call this method to coerce your object to a boolean value, e.g.:
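This sketch uses a hypothetical Color class that is truthy only when its name is green:

```groovy
class Color {
    String name

    // Groovy calls this whenever a Color is used in a boolean context
    boolean asBoolean() {
        name == 'green'
    }
}

assert new Color(name: 'green')
assert !new Color(name: 'red')
```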

6.1. Optional typing

Optional typing is the idea that a program can work even if you don’t put an explicit type on a variable. Being a dynamic language, Groovy naturally implements that feature, for example when you declare a variable:

Groovy will let you write this instead:

So it doesn’t matter whether you use an explicit type here. This is particularly interesting when you combine this feature with static type checking, because the type checker performs type inference.

Likewise, Groovy doesn’t make it mandatory to declare the types of the parameters of a method:

can be rewritten using def as both return type and parameter types, in order to take advantage of duck typing, as illustrated in this example:

Finally, the type can be removed altogether from both the return type and the parameters. But if you want to remove it from the return type, you then need to add an explicit modifier for the method, so that the compiler can distinguish between a method declaration and a method call, as illustrated in this example:
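This sketch shows the three method styles, plus an untyped local variable. The concat method names are hypothetical.

```groovy
// Untyped local variable: works the same as String aString = 'foo'
def aString = 'foo'
assert aString.toUpperCase() == 'FOO'

// Fully typed
String concat1(String a, String b) { a + b }

// 'def' for the return type and parameters: duck typing, anything with plus() works
def concat2(def a, def b) { a + b }

// No types at all: a modifier (here 'private') marks this as a declaration, not a call
private concat3(a, b) { a + b }

assert concat1('foo', 'bar') == 'foobar'
assert concat2('foo', 'bar') == 'foobar'
assert concat2(1, 2) == 3
assert concat3([1], [2]) == [1, 2]
```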

6.2. Static type checking

By default, Groovy performs minimal type checking at compile time. Since it is primarily a dynamic language, most checks that a static compiler would normally do aren’t possible at compile time. A method added via runtime metaprogramming might alter a class or object’s runtime behavior. Let’s illustrate why in the following example:

It is quite common in dynamic languages for code such as the above example not to throw any error. How can this be? In Java, this would typically fail at compile time. However, in Groovy, it will not fail at compile time, and if coded correctly, will also not fail at runtime. In fact, to make this work at runtime, one possibility is to rely on runtime metaprogramming. So just adding this line after the declaration of the Person class is enough:
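Here is a sketch of the situation described. It assumes a hypothetical Person class whose formattedName property does not exist at compile time and is added through the metaclass.

```groovy
class Person {
    String firstName
    String lastName
}

// Without this line, p.formattedName below still compiles but throws MissingPropertyException
Person.metaClass.getFormattedName = { "$delegate.firstName $delegate.lastName" }

def p = new Person(firstName: 'Raymond', lastName: 'Devos')
assert p.formattedName == 'Raymond Devos'
```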

This means that in general, in Groovy, you can’t make any assumption about the type of an object beyond its declared type, and even if you know it, you can’t determine at compile time what method will be called, or which property will be retrieved. This flexibility is very useful, for everything from writing DSLs to testing, which is discussed in other sections of this manual.

However, if your program doesn’t rely on dynamic features and you come from the static world (in particular, from a Java mindset), not catching such "errors" at compile time can be surprising. As we have seen in the previous example, the compiler cannot be sure this is an error. To make it aware that it is, you have to explicitly instruct the compiler that you are switching to a type checked mode. This can be done by annotating a class or a method with @groovy.transform.TypeChecked.

When type checking is activated, the compiler performs much more work:

type inference is activated, meaning that even if you use def on a local variable for example, the type checker will be able to infer the type of the variable from the assignments

method calls are resolved at compile time, meaning that if a method is not declared on a class, the compiler will throw an error

in general, all the compile-time errors that you are used to finding in a static language will appear: method not found, property not found, incompatible types for method calls, number precision errors, …

In this section, we will describe the behavior of the type checker in various situations and explain the limits of using @TypeChecked on your code.

6.2.1. The @TypeChecked annotation

The groovy.transform.TypeChecked annotation enables type checking. It can be placed on a class:

Or on a method:

In the first case, all methods, properties, fields, inner classes, … of the annotated class will be type checked, whereas in the second case, only the method and potential closures or anonymous inner classes that it contains will be type checked.

The scope of type checking can be restricted. For example, if a class is type checked, you can instruct the type checker to skip a method by annotating it with @TypeChecked(TypeCheckingMode.SKIP) :

In the previous example, SentenceBuilder relies on dynamic code. There’s no real Hello method or property, so the type checker would normally complain and compilation would fail. Since the method that uses the builder is marked with TypeCheckingMode.SKIP, type checking is skipped for this method, so the code will compile, even if the rest of the class is type checked.
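Here is a sketch of such a class. It assumes a hypothetical SentenceBuilder that turns every unknown property into a word via propertyMissing. Only doGreet escapes type checking.

```groovy
import groovy.transform.TypeChecked
import groovy.transform.TypeCheckingMode

class SentenceBuilder {
    private final List<String> words = []

    // Any unknown property becomes a word; returning this allows chaining
    def propertyMissing(String name) {
        words << name
        this
    }

    String toString() { words.join(' ') }
}

@TypeChecked
class GreetingService {
    String greeting() {
        doGreet()
    }

    @TypeChecked(TypeCheckingMode.SKIP)   // dynamic code: excluded from type checking
    private String doGreet() {
        def b = new SentenceBuilder()
        b.Hello.my.name.is.John
        b                                  // converted to String through toString()
    }
}

assert new GreetingService().greeting() == 'Hello my name is John'
```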

The following sections describe the semantics of type checking in Groovy.

6.2.2. Type checking assignments

An object o of type A can be assigned to a variable of type T if and only if:

T equals A

or T is one of String, boolean, Boolean or Class

or o is null and T is not a primitive type

or T is an array and A is an array and the component type of A is assignable to the component type of T

or T is an array and A is a collection or stream and the component type of A is assignable to the component type of T

or T is a superclass of A

or T is an interface implemented by A

or T or A are a primitive type and their boxed types are assignable

or T extends groovy.lang.Closure and A is a SAM-type (single abstract method type)

or T and A derive from java.lang.Number and conform to the following table

6.2.3. List and map constructors

In addition to the assignment rules above, if an assignment is deemed invalid, in type checked mode, a list literal or a map literal A can be assigned to a variable of type T if:

the assignment is a variable declaration and A is a list literal and T has a constructor whose parameters match the types of the elements in the list literal

the assignment is a variable declaration and A is a map literal and T has a no-arg constructor and a property for each of the map keys

For example, instead of writing:

You can use a "list constructor":

or a "map constructor":
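This sketch shows the three forms inside a type-checked method. It assumes a hypothetical Person class whose tuple constructor is generated by @TupleConstructor.

```groovy
import groovy.transform.TupleConstructor
import groovy.transform.TypeChecked

@TupleConstructor
class Person {
    String firstName
    String lastName
}

@TypeChecked
List<Person> buildPeople() {
    Person classic = new Person('Ada', 'Lovelace')
    Person fromList = ['Ada', 'Lovelace']                       // "list constructor"
    Person fromMap = [firstName: 'Ada', lastName: 'Lovelace']   // "map constructor"
    // Person bad = [firstName: 'Ada', age: 24]   // would not compile: Person has no property 'age'
    [classic, fromList, fromMap]
}

def people = buildPeople()
assert people*.firstName == ['Ada', 'Ada', 'Ada']
assert people*.lastName == ['Lovelace', 'Lovelace', 'Lovelace']
```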

If you use a map constructor, additional checks are done on the keys of the map to check if a property of the same name is defined. For example, the following will fail at compile time:

6.2.4. Method resolution

In type checked mode, methods are resolved at compile time. Resolution works by name and arguments. The return type is irrelevant to method selection. Types of arguments are matched against the types of the parameters following those rules:

An argument o of type A can be used for a parameter of type T if and only if:

T equals A

or T is a String and A is a GString

or T and A derive from java.lang.Number and conform to the same rules as assignment of numbers

If a method with the appropriate name and arguments is not found at compile time, an error is thrown. The difference with "normal" Groovy is illustrated in the following example:

The example above shows a class that Groovy will be able to compile. However, if you try to create an instance of MyService and call the doSomething method, then it will fail at runtime, because printLine doesn’t exist. Of course, we already showed how Groovy could make this a perfectly valid call, for example by implementing methodMissing or a custom metaclass, but if you know you’re not in such a case, @TypeChecked comes in handy:

Just adding @TypeChecked will trigger compile time method resolution. The type checker will try to find a method printLine accepting a String on the MyService class, but cannot find one. It will fail compilation with the following message:

Cannot find matching method MyService#printLine(java.lang.String)
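This sketch reproduces both behaviors in one script. The dynamic class fails only when the method runs. The @TypeChecked variant is compiled through GroovyShell so that the compilation error can be caught and inspected.

```groovy
import org.codehaus.groovy.control.MultipleCompilationErrorsException

// Dynamic Groovy: compiles fine, fails only when doSomething() runs
class MyService {
    void doSomething() {
        printLine 'Do something'       // no such method
    }
}
def runtimeError = null
try {
    new MyService().doSomething()
} catch (MissingMethodException e) {
    runtimeError = e
}
assert runtimeError.method == 'printLine'

// The same class with @TypeChecked is rejected at compile time
def source = '''
    @groovy.transform.TypeChecked
    class MyService {
        void doSomething() {
            printLine 'Do something'
        }
    }
'''
def compileError = null
try {
    new GroovyShell().parse(source)
} catch (MultipleCompilationErrorsException e) {
    compileError = e
}
assert compileError.message.contains('Cannot find matching method MyService#printLine(java.lang.String)')
```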

There are possible workarounds, like introducing an interface, but basically, by activating type checking, you gain type safety but you lose some features of the language. Fortunately, Groovy introduces some features like flow typing to reduce the gap between type-checked and non type-checked Groovy.

6.2.5. Type inference

When code is annotated with @TypeChecked , the compiler performs type inference. It doesn’t simply rely on static types, but also uses various techniques to infer the types of variables, return types, literals, … so that the code remains as clean as possible even if you activate the type checker.

The simplest example is inferring the type of a variable:

The call to toUpperCase works because the type of message was inferred as being a String.

It is worth noting that although the compiler performs type inference on local variables, it does not perform any kind of type inference on fields, always falling back to the declared type of a field. To illustrate this, let’s take a look at this example:
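This sketch shows the difference. The line the type checker would reject is left as a comment so that the class compiles. The class and member names are hypothetical.

```groovy
import groovy.transform.TypeChecked

@TypeChecked
class SomeClass {
    def someUntypedField
    String someTypedField

    void someMethod() {
        someUntypedField = 'abc'
        // someUntypedField = someUntypedField.toUpperCase()  // rejected: the field's type stays Object
    }

    void someSafeMethod() {
        someTypedField = 'abc'
        someTypedField = someTypedField.toUpperCase()          // fine: declared as String
    }

    void someMethodUsingLocalVariable() {
        def localVariable = 'xyz'                             // inferred as String
        someUntypedField = localVariable.toUpperCase()        // fine: inference applies to locals
    }
}

def c = new SomeClass()
c.someSafeMethod()
c.someMethodUsingLocalVariable()
assert c.someTypedField == 'ABC'
assert c.someUntypedField == 'XYZ'
```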

Why such a difference? The reason is thread safety. At compile time, we can’t make any guarantee about the type of a field. Any thread can access any field at any time, and between the moment a field is assigned a value of some type in a method and the moment it is used on the next line, another thread may have changed the contents of the field. This is not the case for local variables: we know if they "escape" or not, so we can make sure that the type of a variable is constant (or not) over time. Note that even if a field is final, the JVM makes no guarantee about it, so the type checker doesn’t behave differently whether a field is final or not.

Groovy provides a syntax for various type literals. There are three native collection literals in Groovy:

lists, using the [] literal

maps, using the [:] literal

ranges, using from..to (inclusive), from..<to (right exclusive), from<..to (left exclusive) and from<..<to (full exclusive)

The inferred type of a literal depends on the elements of the literal, as illustrated in the following table:

As you can see, with the noticeable exception of the IntRange, the inferred type makes use of generics types to describe the contents of a collection. In case the collection contains elements of different types, the type checker still performs type inference of the components, but uses the notion of least upper bound.

In Groovy, the least upper bound of two types A and B is defined as a type which:

superclass corresponds to the common super class of A and B

interfaces correspond to the interfaces implemented by both A and B

if A or B is a primitive type and A isn’t equal to B, the least upper bound of A and B is the least upper bound of their wrapper types

If A and B only have one (1) interface in common and their common superclass is Object, then the LUB of both is the common interface.

The least upper bound represents the minimal type to which both A and B can be assigned. So for example, if A and B are both String , then the LUB (least upper bound) of both is also String .

In those examples, the LUB is always representable as a normal, JVM-supported type. But Groovy internally represents the LUB as a type which can be more complex, and which you wouldn’t be able to use to define a variable, for example. To illustrate this, let’s continue with this example:

What is the least upper bound of Bottom and SerializableFooImpl? They don’t have a common super class (apart from Object), but they do share 2 interfaces (Serializable and Foo), so their least upper bound is a type which represents the union of two interfaces (Serializable and Foo). This type cannot be defined in the source code, yet Groovy knows about it.

In the context of collection type inference (and generic type inference in general), this becomes handy, because the type of the components is inferred as the least upper bound. We can illustrate why this is important in the following example:

The error message will look like:

which indicates that the exit method is defined on neither Greeter nor Salute, which are the two interfaces defined in the least upper bound of A and B.
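This sketch assumes hypothetical classes A and B that both implement Greeter and Salute and each declare their own exit() method. The call the type checker rejects is left as a comment.

```groovy
import groovy.transform.TypeChecked

interface Greeter { String greeting() }
interface Salute { String salute() }

class A implements Greeter, Salute {
    String greeting() { 'Greeting from A' }
    String salute() { 'Salute from A' }
    void exit() { }
}
class B implements Greeter, Salute {
    String greeting() { 'Greeting from B' }
    String salute() { 'Salute from B' }
    void exit() { }
}

@TypeChecked
List<String> greetAll() {
    def list = [new A(), new B()]   // component type: LUB of A and B (Greeter and Salute)
    // list.each { it.exit() }      // rejected: exit() is on neither Greeter nor Salute
    list.collect { it.greeting() + ' / ' + it.salute() }
}

assert greetAll() == ['Greeting from A / Salute from A', 'Greeting from B / Salute from B']
```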

In normal, non type checked, Groovy, you can write things like:

The method call works because of dynamic dispatch (the method is selected at runtime). The equivalent code in Java would require casting o to a Greeter before calling the greeting method, because methods are selected at compile time:

However, in Groovy, even if you add @TypeChecked (and thus activate type checking) on the doSomething method, the cast is not necessary. The compiler embeds instanceof inference that makes the cast optional.
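This sketch uses a hypothetical Greeter interface and English implementation. No cast is written inside the instanceof branch.

```groovy
import groovy.transform.TypeChecked

interface Greeter {
    String greeting()
}
class English implements Greeter {
    String greeting() { 'Hello' }
}

@TypeChecked
String greetIfPossible(Object o) {
    if (o instanceof Greeter) {
        return o.greeting()    // no cast: o is known to be a Greeter in this branch
    }
    return 'not a greeter'
}

assert greetIfPossible(new English()) == 'Hello'
assert greetIfPossible(42) == 'not a greeter'
```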

Flow typing is an important concept of Groovy in type checked mode and an extension of type inference. The idea is that the compiler is capable of inferring the type of variables in the flow of the code, not just at initialization:
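A minimal sketch of flow typing inside a type-checked method. The line that would be rejected is kept as a comment.

```groovy
import groovy.transform.TypeChecked

@TypeChecked
double flowTyping() {
    def o = 'foo'            // inferred as String
    o = o.toUpperCase()      // String method: accepted
    o = 9d                   // from here on, inferred as double
    o = Math.sqrt(o)         // double argument: accepted
    // o = o.toUpperCase()   // rejected: o is a double at this point
    return o
}

assert flowTyping() == 3.0d
```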

So the type checker is aware of the fact that the concrete type of a variable can change over time. In particular, if you replace the last assignment with:

The type checker will now fail at compile time, because it knows that o is a double when toUpperCase is called, so it’s a type error.

It is important to understand that it is not the fact of declaring a variable with def that triggers type inference. Flow typing works for any variable of any type. Declaring a variable with an explicit type only constrains what you can assign to the variable:

You can also note that even if the variable is declared without generics information, the type checker knows what the component type is. Therefore, such code would fail compilation:

Fixing this requires adding an explicit generic type to the declaration:

Flow typing has been introduced to reduce the difference in semantics between classic and static Groovy. In particular, consider the behavior of this code in Java:

In Java, this code will output Nope, because method selection is done at compile time and based on the declared types. So even if o is a String at runtime, it is still the Object version which is called, because o has been declared as an Object. In short, in Java, declared types are what matter most, be it variable types, parameter types or return types.

In Groovy, we could write:

But this time, it will return 6, because the method is chosen at runtime, based on the actual argument types. So at runtime, o is a String, so the String variant is used. Note that this behavior has nothing to do with type checking; it’s the way Groovy works in general: dynamic dispatch.

In type checked Groovy, we want to make sure the type checker selects the same method at compile time that the runtime would choose. It is not possible in general, due to the semantics of the language, but we can make things better with flow typing. With flow typing, o is inferred as a String when the compute method is called, so the version which takes a String and returns an int is chosen. This means that we can infer the return type of the method to be an int, and not a String. This is important for subsequent calls and type safety.

So in type checked Groovy, flow typing is a very important concept, which also implies that if @TypeChecked is applied, methods are selected based on the inferred types of the arguments, not on the declared types. This doesn’t ensure 100% type safety, because the type checker may select the wrong method, but it ensures the closest semantics to dynamic Groovy.
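To see flow typing drive method selection under @TypeChecked, here is a sketch with the same two overloads inside a class; the class name Dispatcher is hypothetical. Assigning the call result to an int only compiles because the type checker selects compute(String), whose declared return type is int:

```groovy
import groovy.transform.TypeChecked

@TypeChecked
class Dispatcher {
    int compute(String str) { str.length() }
    String compute(Object o) { 'Nope' }

    int test() {
        Object o = 'string'
        // flow typing infers o as String here, so compute(String) is selected at
        // compile time; if compute(Object) were chosen, assigning its String result
        // to an int would be a compilation error
        int length = compute(o)
        length
    }
}

assert new Dispatcher().test() == 6
```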

A combination of flow typing and least upper bound inference is used to perform advanced type inference and ensure type safety in multiple situations. In particular, program control structures are likely to alter the inferred type of a variable:

When the type checker visits an if/else control structure, it checks all variables which are assigned in the if/else branches and computes the least upper bound of all assignments. This LUB becomes the inferred type of the variable after the if/else block. In this example, o is assigned a Top in the if branch and a Bottom in the else branch. The LUB of those is Top, so after the conditional branches, the compiler infers o as being a Top. Calling methodFromTop will therefore be allowed, but not methodFromBottom.
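A minimal sketch of this if/else case, assuming two hypothetical classes Top and Bottom where Bottom extends Top; the method name afterBranches is also illustrative:

```groovy
import groovy.transform.TypeChecked

class Top { String methodFromTop() { 'top' } }
class Bottom extends Top { String methodFromBottom() { 'bottom' } }

@TypeChecked
String afterBranches(boolean someCondition) {
    def o
    if (someCondition) {
        o = new Top()
    } else {
        o = new Bottom()
    }
    // here o is inferred as Top, the least upper bound of Top and Bottom
    // o.methodFromBottom()   // would be rejected at compile time
    o.methodFromTop()         // allowed
}

assert afterBranches(true) == 'top'
assert afterBranches(false) == 'top'
```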

The same reasoning exists with closures and in particular closure shared variables. A closure shared variable is a variable which is defined outside of a closure, but used inside a closure, as in this example:

Groovy allows developers to use those variables without requiring them to be final. This means that a closure shared variable can be reassigned inside a closure:

The problem is that a closure is an independent block of code that can be executed (or not) at any time. For example, doSomething may be asynchronous. This means that the body of a closure doesn’t belong to the main control flow. For that reason, the type checker also computes, for each closure shared variable, the LUB of all assignments of the variable, and will use that LUB as the inferred type outside of the scope of the closure, as in this example:

Here, it is clear that when methodFromBottom is called, there’s no guarantee, at compile time or at runtime, that the type of o will actually be a Bottom. It may well be, but we can’t be sure, because the closure may run asynchronously. So the type checker will only allow calls on the least upper bound, which here is Top.
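The same idea with a closure shared variable, as a sketch: the closure here runs on another thread through the GDK method Thread.start, standing in for the asynchronous doSomething mentioned above. Top and Bottom are hypothetical classes, Bottom extending Top:

```groovy
import groovy.transform.TypeChecked

class Top { String methodFromTop() { 'top' } }
class Bottom extends Top { String methodFromBottom() { 'bottom' } }

@TypeChecked
String sharedVariable() {
    def o = new Top()
    Thread t = Thread.start {
        o = new Bottom()      // reassigned inside a closure, on another thread
    }
    t.join()
    // outside the closure, o is inferred as Top, the LUB of all its assignments
    // o.methodFromBottom()   // would be rejected at compile time
    o.methodFromTop()
}

assert sharedVariable() == 'top'
```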

6.2.6. Closures and type inference

The type checker performs special inference on closures, resulting in additional checks on one side and improved fluency on the other side.

The first thing that the type checker is capable of doing is inferring the return type of a closure. This is simply illustrated in the following example:

As you can see, unlike a method which declares its return type explicitly, there’s no need to declare the return type of a closure: its type is inferred from the body of the closure.
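A minimal sketch of closure return type inference; the method name and argument are illustrative. The closure declares no return type, but the type checker infers it from the body, so length() resolves statically:

```groovy
import groovy.transform.TypeChecked

@TypeChecked
int closureReturnType(String arg) {
    def cl = { "Arg: $arg" }   // no return type declared on the closure
    def val = cl()             // inferred from the closure body as a GString
    val.length()               // so length() is resolved at compile time
}

assert closureReturnType('Groovy') == 11
```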

It’s worth noting that return type inference is only applicable to closures. While the type checker could do the same on a method, it is in practice not desirable: in general, methods can be overridden and it is not statically possible to make sure that the method which is called is not an overridden version. So flow typing would actually think that a method returns something, while in reality, it could return something else, as illustrated in the following example:

As you can see, if the type checker relied on the inferred return type of a method, with flow typing, it could determine that it is ok to call toUpperCase. It is in fact an error, because a subclass can override compute and return a different object. Here, B#compute returns an int, so someone calling computeFully on an instance of B would see a runtime error. The compiler prevents this from happening by using the declared return type of methods instead of the inferred return type.
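The scenario can be sketched with hypothetical classes A and B. This version is left dynamic so that it runs; annotating A with @TypeChecked would instead reject computeFully at compile time, because compute() is declared to return Object, which has no toUpperCase method:

```groovy
class A {
    def compute() { 'some string' }
    def computeFully() {
        compute().toUpperCase()   // fine as long as compute() really returns a String
    }
}

class B extends A {
    @Override
    def compute() { 123 }         // the override returns an Integer instead
}

assert new A().computeFully() == 'SOME STRING'

boolean failed = false
try {
    new B().computeFully()        // Integer has no toUpperCase()
} catch (MissingMethodException e) {
    failed = true
}
assert failed
```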

For consistency, this behavior is the same for every method, even static or final ones.

Parameter type inference

In addition to the return type, it is possible for a closure to infer its parameter types from the context. There are two ways for the compiler to infer the parameter types:

through implicit SAM type coercion

through API metadata

To illustrate this, let’s start with an example that will fail compilation due to the inability of the type checker to infer the parameter types:

In this example, the closure body contains it.age. With dynamic, not type checked code, this would work, because the type of it would be a Person at runtime. Unfortunately, at compile time, there’s no way to know the type of it just by reading the signature of inviteIf.

In short, the type checker doesn’t have enough contextual information on the inviteIf method to statically determine the type of it. This means that the method call needs to be rewritten like this:

By explicitly declaring the type of the it variable, you can work around the problem and make this code statically checked.
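A minimal sketch of this workaround, with a hypothetical Person class and an inviteIf method that simply returns the predicate's result so that it can be observed; isInvited is also illustrative:

```groovy
import groovy.transform.TypeChecked

class Person {
    String name
    int age
}

// returns whether the invitation would be sent (illustrative behavior)
boolean inviteIf(Person p, Closure<Boolean> predicate) {
    predicate.call(p)
}

@TypeChecked
boolean isInvited(Person p) {
    // declaring "Person it" gives the type checker the type of the parameter
    inviteIf(p) { Person it -> it.age >= 18 }
}

assert isInvited(new Person(name: 'Gerard', age: 55))
assert !isInvited(new Person(name: 'Tim', age: 12))
```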

For an API or framework designer, there are two ways to make this more elegant for users, so that they don’t have to declare an explicit type for the closure parameters. The first one, and easiest, is to replace the closure with a SAM type:
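A sketch of the SAM-type approach, assuming a hypothetical single-abstract-method interface named Predicate and the same kind of Person class. Because the parameter type is Predicate<Person>, the implicit it is inferred as a Person without any declaration:

```groovy
import groovy.transform.TypeChecked

class Person {
    String name
    int age
}

// single abstract method (SAM) interface; the name Predicate is illustrative
interface Predicate<On> {
    boolean apply(On e)
}

boolean inviteIf(Person p, Predicate<Person> predicate) {
    predicate.apply(p)
}

@TypeChecked
boolean isInvited(Person p) {
    inviteIf(p) { it.age >= 18 }   // the closure is coerced to Predicate<Person>
}

assert isInvited(new Person(name: 'Gerard', age: 55))
assert !isInvited(new Person(name: 'Tim', age: 12))
```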

The underlying issue with closure parameter type inference, that is to say, statically determining the types of the arguments of a closure without requiring them to be declared explicitly, is that the Groovy type system inherits the Java type system, which is insufficient to describe the types of those arguments.

Groovy provides an annotation, @ClosureParams, which is aimed at completing type information. This annotation is primarily aimed at framework and API developers who want to extend the capabilities of the type checker by providing type inference metadata. This is important if your library makes use of closures and you want the maximum level of tooling support too.

Let’s illustrate this by fixing the original example, introducing the @ClosureParams annotation:

The @ClosureParams annotation minimally accepts one argument, which is called a type hint. A type hint is a class which is responsible for completing type information at compile time for the closure. In this example, the type hint being used is groovy.transform.stc.FirstParam, which indicates to the type checker that the closure will accept one parameter whose type is the type of the first parameter of the method. In this case, the first parameter of the method is Person, so it indicates to the type checker that the first parameter of the closure is in fact a Person.
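The fix can be sketched as follows; groovy.transform.stc.ClosureParams and groovy.transform.stc.FirstParam are the Groovy classes named in the text, while Person, inviteIf and isInvited are illustrative:

```groovy
import groovy.transform.TypeChecked
import groovy.transform.stc.ClosureParams
import groovy.transform.stc.FirstParam

class Person {
    String name
    int age
}

// FirstParam: the closure receives one parameter typed like the method's first parameter
boolean inviteIf(Person p, @ClosureParams(FirstParam) Closure<Boolean> predicate) {
    predicate.call(p)
}

@TypeChecked
boolean isInvited(Person p) {
    inviteIf(p) { it.age >= 18 }   // it is inferred as Person
}

assert isInvited(new Person(name: 'Gerard', age: 55))
assert !isInvited(new Person(name: 'Tim', age: 12))
```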

A second optional argument is named options. Its semantics depend on the type hint class. Groovy comes with various bundled type hints, illustrated in the table below:

In short, the lack of the @ClosureParams annotation on a method accepting a Closure will not fail compilation. If present (and it can be present in Java sources as well as Groovy sources), then the type checker has more information and can perform additional type inference. This makes this feature particularly interesting for framework developers.
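As an illustration of the options argument, here is a sketch using the bundled FromString type hint, whose options are type names (written fully qualified here to be safe); applyTo and upperLength are hypothetical:

```groovy
import groovy.transform.TypeChecked
import groovy.transform.stc.ClosureParams
import groovy.transform.stc.FromString

// the closure is declared to receive a single java.lang.String
int applyTo(String str, @ClosureParams(value = FromString, options = 'java.lang.String') Closure<Integer> c) {
    c.call(str)
}

@TypeChecked
int upperLength(String s) {
    applyTo(s) { it.toUpperCase().length() }   // it is inferred as String
}

assert upperLength('groovy') == 6
```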

A third optional argument is named conflictResolutionStrategy. It can reference a class (extending ClosureSignatureConflictResolver) that can perform additional resolution of parameter types if more than one signature is found after the initial inference calculations are complete. Groovy comes with a default resolver which does nothing, and another which selects the first signature if multiple are found. The resolver is only invoked if more than one signature is found and is, by design, a post-processor: any statement which needs injected typing information must still pass one of the parameter signatures determined through the type hints, and the resolver then picks among the returned candidate signatures.

The @DelegatesTo annotation is used by the type checker to infer the type of the delegate. It allows the API designer to tell the compiler the type of the delegate and the delegation strategy. The @DelegatesTo annotation is discussed in a specific section.

6.3. Static compilation

In the type checking section, we saw that Groovy provides optional type checking thanks to the @TypeChecked annotation. The type checker runs at compile time and performs a static analysis of dynamic code. The program will behave exactly the same whether type checking has been enabled or not. This means that the @TypeChecked annotation is neutral with regard to the semantics of a program. Even though it may be necessary to add type information in the sources so that the program is considered type safe, in the end, the semantics of the program are the same.

While this may sound fine, there is actually one issue with this: type checking of dynamic code, done at compile time, is by definition only correct if no runtime specific behavior occurs. For example, the following program passes type checking:

There are two compute methods. One accepts a String and returns an int, the other accepts an int and returns a String. If you compile this, it is considered type safe: the inner compute('foobar') call will return an int, and calling compute on this int will in turn return a String.

Now, before calling test(), consider adding the following line:

Using runtime metaprogramming, we’re actually modifying the behavior of the compute(String) method, so that instead of returning the length of the provided argument, it will return a Date. If you execute the program, it will fail at runtime. Since this line can be added from anywhere, in any thread, there’s absolutely no way for the type checker to statically make sure that no such thing happens. In short, the type checker is vulnerable to monkey patching. This is just one example, but it illustrates the concept that static analysis of a dynamic program is inherently unsound.

The Groovy language provides an alternative annotation to @TypeChecked which will actually make sure that the methods which are inferred as being called will effectively be called at runtime. This annotation turns the Groovy compiler into a static compiler, where all method calls are resolved at compile time and the generated bytecode makes sure that this happens: the annotation is @groovy.transform.CompileStatic.

The @CompileStatic annotation can be added anywhere the @TypeChecked annotation can be used, that is to say on a class or a method. It is not necessary to add both @TypeChecked and @CompileStatic, as @CompileStatic performs everything @TypeChecked does, but in addition triggers static compilation.

Let’s take the example which failed, but this time let’s replace the @TypeChecked annotation with @CompileStatic:

This is the only difference. If we execute this program, this time, there is no runtime error. The test method became immune to monkey patching, because the compute methods which are called in its body are linked at compile time, so even if the metaclass of Computer changes, the program still behaves as expected by the type checker.
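The whole scenario can be condensed into one runnable sketch: the Computer class and the metaclass change follow the description above, while the method names typeChecked and compileStatic are hypothetical. After the monkey patch, only the statically compiled variant still calls the original compute(String):

```groovy
import groovy.transform.CompileStatic
import groovy.transform.TypeChecked

class Computer {
    int compute(String str) { str.length() }
    String compute(int x) { String.valueOf(x) }
}

@TypeChecked
String typeChecked(Computer computer) {
    computer.compute(computer.compute('foobar'))   // calls are still dispatched dynamically
}

@CompileStatic
String compileStatic(Computer computer) {
    computer.compute(computer.compute('foobar'))   // calls are linked at compile time
}

// monkey patching: at runtime, compute(String) now returns a Date
Computer.metaClass.compute = { String str -> new Date() }

assert compileStatic(new Computer()) == '6'

boolean failed = false
try {
    typeChecked(new Computer())   // the inner call returns a Date: no compute(Date) exists
} catch (MissingMethodException e) {
    failed = true
}
assert failed
```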

There are several benefits of using @CompileStatic on your code:

type safety

immunity to monkey patching

performance improvements

The performance improvements depend on the kind of program you are executing. If it is I/O bound, the difference between statically compiled code and dynamic code is barely noticeable. On highly CPU-intensive code, since the generated bytecode is very close, if not equal, to the bytecode that Java would produce for an equivalent program, the performance is greatly improved.

7. Type checking extensions

7.1. Writing a type checking extension

Despite being a dynamic language, Groovy can be used with a static type checker at compile time, enabled using the @TypeChecked annotation. In this mode, the compiler becomes more verbose and throws errors for typos, non-existent methods, and so on. This comes with a few limitations though, most of them coming from the fact that Groovy remains inherently a dynamic language. For example, you wouldn’t be able to use type checking on code that uses the markup builder:

In the previous example, none of the html, head, body or p methods exist. However, if you execute the code, it works because Groovy uses dynamic dispatch and converts those method calls at runtime. In this builder, there’s no limitation on the number of tags that you can use, nor on the attributes, which means there is no chance for a type checker to know about all the possible methods (tags) at compile time, unless you create a builder dedicated to HTML, for example.
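A runnable sketch of such builder code, assuming the groovy-xml module (which provides groovy.xml.MarkupBuilder) is on the classpath; the page content is illustrative. It works dynamically, whereas annotating the code with @TypeChecked would make the compiler reject html, head and the other tag calls:

```groovy
import groovy.xml.MarkupBuilder

def writer = new StringWriter()
def xml = new MarkupBuilder(writer)

// none of html, head, title, body or p are real methods of MarkupBuilder:
// each call is intercepted at runtime and turned into an element
xml.html {
    head {
        title 'Hello'
    }
    body {
        p 'Hello, world!'
    }
}

println writer
```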

Groovy is a platform of choice when it comes to implementing internal DSLs. The flexible syntax, combined with runtime and compile-time metaprogramming capabilities, makes Groovy an interesting choice because it allows the programmer to focus on the DSL rather than on tooling or implementation. Since Groovy DSLs are Groovy code, it’s easy to have IDE support without having to write a dedicated plugin, for example.

In a lot of cases, DSL engines are written in Groovy (or Java) and user code is executed as scripts, meaning that you have some kind of wrapper on top of user logic. The wrapper may consist, for example, of a GroovyShell or GroovyScriptEngine that performs some tasks transparently before running the script (adding imports, applying AST transforms, extending a base script,…). Often, user-written scripts reach production without testing, because the DSL logic comes to a point where any user may write code using the DSL syntax. In the end, users may not even realize that what they write is actually code. This adds some challenges for the DSL implementer, such as securing execution of user code or, in this case, early reporting of errors.

For example, imagine a DSL whose goal is to drive a rover on Mars remotely. Sending a message to the rover takes around 15 minutes. If the rover executes the script and fails with an error (say a typo), you have two problems:

first, feedback comes only after 30 minutes (the time needed for the rover to get the script and the time needed to receive the error)

second, some portion of the script has been executed and you may have to change the fixed script significantly (implying that you need to know the current state of the rover…)

Type checking extensions are a mechanism that allows the developer of a DSL engine to make those scripts safer by applying the same kind of checks that static type checking allows on regular Groovy classes.

The principle, here, is to fail early, that is to say fail compilation of scripts as soon as possible, and if possible provide feedback to the user (including nice error messages).

In short, the idea behind type checking extensions is to make the compiler aware of all the runtime metaprogramming tricks that the DSL uses, so that scripts can benefit from the same level of compile-time checks as verbose statically compiled code would have. We will see that you can go even further by performing checks that a normal type checker wouldn’t do, delivering powerful compile-time checks for your users.

The @TypeChecked annotation supports an attribute named extensions. This parameter takes an array of strings corresponding to a list of type checking extension scripts. Those scripts are found at compile time on the classpath. For example, you would write:

In that case, the foo methods would be type checked with the rules of the normal type checker completed by those found in the myextension.groovy script. Note that while internally the type checker supports multiple mechanisms to implement type checking extensions (including plain old Java code), the recommended way is to use those type checking extension scripts.

The idea behind type checking extensions is to use a DSL to extend the type checker capabilities. This DSL allows you to hook into the compilation process, more specifically the type checking phase, using an "event-driven" API. For example, when the type checker enters a method body, it throws a beforeVisitMethod event that the extension can react to:
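A minimal extension script reacting to this event could look like the fragment below. It is not a standalone program: it is run by the type checker when referenced through the extensions attribute, and the file name myextension.groovy is only an example:

```groovy
// myextension.groovy, placed on the compilation classpath and referenced with:
//     @groovy.transform.TypeChecked(extensions = 'myextension.groovy')
beforeVisitMethod { methodNode ->
    // called each time the type checker starts visiting a method body
    println "Entering ${methodNode.name}"
}
```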

Imagine that you have this rover DSL at hand. A user would write:

If you have a class defined as such:

The script can be type checked before being executed using the following script:

Using the compiler configuration above, we can apply @TypeChecked transparently to the script. In that case, it will fail at compile time:
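These steps can be sketched as a self-contained script, assuming a minimal hypothetical Robot class: the same user script runs when evaluated dynamically, but fails to compile once an ASTTransformationCustomizer applies @TypeChecked to it:

```groovy
import groovy.transform.TypeChecked
import org.codehaus.groovy.control.CompilationFailedException
import org.codehaus.groovy.control.CompilerConfiguration
import org.codehaus.groovy.control.customizers.ASTTransformationCustomizer

class Robot {
    Robot move(int qt) { this }
}

String userScript = 'robot.move 100'

// without type checking, the script runs: robot is resolved from the binding at runtime
def dynamicShell = new GroovyShell(new Binding(robot: new Robot()))
assert dynamicShell.evaluate(userScript) instanceof Robot

// apply @TypeChecked transparently to every script compiled with this configuration
def config = new CompilerConfiguration()
config.addCompilationCustomizers(new ASTTransformationCustomizer(TypeChecked))
def checkedShell = new GroovyShell(new Binding(robot: new Robot()), config)

String error = null
try {
    checkedShell.evaluate(userScript)
} catch (CompilationFailedException e) {
    error = e.message   // the type checker reports robot as an undeclared variable
}
assert error != null
```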

Now, we will slightly update the configuration to include the extensions parameter:

Then add the following to your classpath:

Here, we’re telling the compiler that if an unresolved variable is found and the name of the variable is robot, then the type of this variable is Robot.
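The extension script in question could look like the following fragment, assuming it is saved under the hypothetical name robotextension.groovy on the compilation classpath and that the Robot class is visible to the compiler:

```groovy
// robotextension.groovy, referenced from the compiler configuration, for example:
//     config.addCompilationCustomizers(
//         new ASTTransformationCustomizer(TypeChecked, extensions: ['robotextension.groovy']))
unresolvedVariable { var ->
    if ('robot' == var.name) {
        storeType(var, classNodeFor(Robot))   // the variable is a Robot
        handled = true                         // and it must no longer be reported
    }
}
```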

7.1.4. Type checking extensions API

The type checking API is a low level API, dealing with the Abstract Syntax Tree. You will have to know your AST well to develop extensions, even if the DSL makes it much easier than just dealing with AST code from plain Java or Groovy.

The type checker sends the following events, to which an extension script can react:

Of course, an extension script may consist of several blocks, and you can have multiple blocks responding to the same event. This makes the DSL look nicer and easier to write. However, reacting to events is far from sufficient: you also need to deal with errors, which implies several helper methods that will make things easier.

7.1.5. Working with extensions

The DSL relies on a support class called org.codehaus.groovy.transform.stc.GroovyTypeCheckingExtensionSupport. This class itself extends org.codehaus.groovy.transform.stc.TypeCheckingExtension. Those two classes define a number of helper methods that will make working with the AST easier, especially regarding type checking. One interesting thing to know is that you have access to the type checker. This means that you can programmatically call methods of the type checker, including those that allow you to throw compilation errors.

The extension script delegates to the org.codehaus.groovy.transform.stc.GroovyTypeCheckingExtensionSupport class, meaning that you have direct access to the following variables:

context : the type checker context, of type org.codehaus.groovy.transform.stc.TypeCheckingContext

typeCheckingVisitor : the type checker itself, a org.codehaus.groovy.transform.stc.StaticTypeCheckingVisitor instance

generatedMethods : a list of "generated methods", which is in fact the list of "dummy" methods that you can create inside a type checking extension using the newMethod calls

The type checking context contains a lot of information that is useful to the type checker, for example the current stack of enclosing method calls, binary expressions, closures,… This information is particularly important if you need to know where you are when an error occurs and want to handle it.

In addition to facilities provided by GroovyTypeCheckingExtensionSupport and StaticTypeCheckingVisitor, a type-checking DSL script imports static members from org.codehaus.groovy.ast.ClassHelper and org.codehaus.groovy.transform.stc.StaticTypeCheckingSupport, granting access to common types via OBJECT_TYPE, STRING_TYPE, THROWABLE_TYPE, etc. and checks like missesGenericsTypes(ClassNode), isClassClassNodeWrappingConcreteType(ClassNode) and so on.

Handling class nodes is something that needs particular attention when you work with a type checking extension. Compilation works with an abstract syntax tree (AST) and the tree may not be complete when you are type checking a class. This also means that when you refer to types, you must not use class literals such as String or HashSet, but class nodes representing those types. This requires a certain level of abstraction and an understanding of how Groovy deals with class nodes. To make things easier, Groovy supplies several helper methods to deal with class nodes. For example, if you want to say "the type for String", you can write:

Note that there is also a variant of classNodeFor that takes a String as an argument, instead of a Class. In general, you should not use that one, because it would create a class node whose name is String, but without any method, any property, … defined on it. The first version returns a class node that is resolved, but the second one returns one that is not. So the latter should be reserved for very special cases.
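The difference between resolved and unresolved class nodes can be observed outside of an extension. This sketch assumes that the classNodeFor helpers delegate to org.codehaus.groovy.ast.ClassHelper.make, which appears to be the case in current Groovy versions but should be treated as an assumption, and calls ClassHelper directly:

```groovy
import org.codehaus.groovy.ast.ClassHelper
import org.codehaus.groovy.ast.ClassNode

// built from a Class: resolved, backed by java.lang.String and its members
ClassNode resolved = ClassHelper.make(String)
// built from a simple name: only a name, with no link to java.lang.String
ClassNode byName = ClassHelper.make('String')

assert resolved.isResolved()
assert resolved.typeClass == String
assert !byName.isResolved()
```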

The second problem that you might encounter is referencing a type which is not yet compiled. This may happen more often than you think, for example when you compile a set of files together. In that case, if you want to say "that variable is of type Foo" but Foo is not yet compiled, you can still refer to the Foo class node using lookupClassNodeFor:

Say that you know that variable foo is of type Foo and you want to tell the type checker about it. Then you can use the storeType method, which takes two arguments: the first one is the node for which you want to store the type and the second one is the type of the node. If you look at the implementation of storeType, you will see that it delegates to the equivalent method of the type checker, which itself does a lot of work to store node metadata. You will also see that storing the type is not limited to variables: you can set the type of any expression.
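A fragment combining lookupClassNodeFor and storeType, assuming a hypothetical class Foo compiled in the same compilation unit and a script variable named foo that the type checker cannot resolve on its own:

```groovy
// hypothetical extension script: the unresolved variable foo is a Foo, where Foo
// is compiled together with the checked code and is not yet available as a Class
unresolvedVariable { var ->
    if (var.name == 'foo') {
        def fooType = lookupClassNodeFor('Foo')   // may be null if Foo is not in the unit
        if (fooType != null) {
            storeType(var, fooType)
            handled = true
        }
    }
}
```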

Likewise, getting the type of an AST node is just a matter of calling getType on that node. This would in general be what you want, but there’s something that you must understand:

getType returns the inferred type of an expression. This means that for a variable declared as Object, it will not return the class node for Object, but the inferred type of this variable at this point of the code (flow typing)

if you want to access the original (declared) type of a variable (or field/parameter), then you must call the appropriate method on the AST node, such as getOriginType()

To throw a type checking error, you only have to call the addStaticTypeError method which takes two arguments:

a message which is a string that will be displayed to the end user

an AST node responsible for the error. It’s better to provide the most relevant AST node, because it will be used to retrieve the line and column numbers
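For instance, a hypothetical rule forbidding calls to a method named launchMissiles could report its error on the offending call expression, so that the message points at the right line and column:

```groovy
// hypothetical extension script: forbid calls to a method named launchMissiles
onMethodSelection { expr, methodNode ->
    if (methodNode.name == 'launchMissiles') {
        // expr is the call being checked, so the error is reported at its position
        addStaticTypeError('Calls to launchMissiles are not allowed in this DSL', expr)
    }
}
```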

It is often necessary to know what kind of AST node you are dealing with. For readability, the DSL provides special isXXXExpression methods that delegate to x instanceof XXXExpression. For example, instead of writing:

you can just write:
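Inside an extension script, the two checks in this fragment are equivalent; the afterMethodCall event is used only to have an expression to test:

```groovy
import org.codehaus.groovy.ast.expr.MethodCallExpression

afterMethodCall { call ->
    if (call instanceof MethodCallExpression) {
        // plain Groovy check, which requires the import above
    }
    if (isMethodCallExpression(call)) {
        // equivalent DSL shortcut
    }
}
```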

When you perform type checking of dynamic code, you may often face the case when you know that a method call is valid but there is no "real" method behind it. As an example, take the Grails dynamic finders. You can have a method call consisting of a method named findByName(…). As there’s no findByName method defined in the bean, the type checker would complain. Yet you know that this method wouldn’t fail at runtime, and you can even tell what the return type of this method is. For this case, the DSL supports special constructs that consist of phantom methods. This means that you will return a method node that doesn’t really exist but is defined in the context of type checking. Three methods exist:

newMethod(String name, Class returnType)

newMethod(String name, ClassNode returnType)

newMethod(String name, Callable<ClassNode> returnType)

All three variants do the same: they create a new method node whose name is the supplied name and define the return type of this method. Moreover, the type checker adds those methods to the generatedMethods list (see isGenerated below). The reason why we only set a name and a return type is that it is all you need in 90% of the cases. For example, in the findByName example above, the only thing you need to know is that findByName wouldn’t fail at runtime, and that it returns a domain class. The Callable version of the return type is interesting because it defers the computation of the return type until the type checker actually needs it. This is useful because in some circumstances, you may not yet know the actual return type when the method node is created, so you can use a closure that will be called each time getReturnType is called by the type checker on this method node. If you combine this with deferred checks, you can achieve pretty complex type checking, including handling of forward references.
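A sketch of the dynamic finder case, written as an extension script fragment. It assumes, hypothetically, that any findByXxx call with one argument returns an instance of the receiver type; a real Grails-aware extension would need to determine the actual domain class:

```groovy
// hypothetical extension script: Grails-style dynamic finders such as findByName(...)
methodNotFound { receiver, name, argList, argTypes, call ->
    if (name.startsWith('findBy') && argList.size() == 1) {
        handled = true
        // phantom method: a name and a return type are enough for the checker;
        // here the receiver type is assumed to be the domain class being queried
        return newMethod(name, receiver)
    }
}
```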

Should you need more than the name and return type, you can always create a new MethodNode by yourself.

Scoping is very important in DSL type checking and is one of the reasons why we couldn’t use a pointcut based approach to DSL type checking. Basically, you must be able to define very precisely when your extension applies and when it does not. Moreover, you must be able to handle situations that a regular type checker would not be able to handle, such as forward references:

Say for example that you want to handle a builder:

Your extension, then, should only be active once you’ve entered the foo method, and inactive outside this scope. But you could have complex situations like multiple builders in the same file or embedded builders (builders in builders). While you should not try to fix all this from the start (you must accept limitations to type checking), the type checker does offer a nice mechanism to handle this: a scoping stack, using the newScope and scopeExit methods.

newScope creates a new scope and puts it on top of the stack

scopeExit pops a scope from the stack

A scope consists of:

a parent scope

a map of custom data

If you want to look at the implementation, it’s simply a LinkedHashMap ( org.codehaus.groovy.transform.stc.GroovyTypeCheckingExtensionSupport.TypeCheckingScope ), but it’s quite powerful. For example, you can use such a scope to store a list of closures to be executed when you exit the scope. This is how you would handle forward references:

In other words, if at some point you are not able to determine the type of an expression, or not yet able to check whether an assignment is valid, you can still perform the check later. This is a very powerful feature. Now, newScope and scopeExit provide some interesting syntactic sugar:

At any time in the DSL, you can access the current scope using getCurrentScope() or more simply currentScope:

The general schema would then be:

determine a pointcut where you push a new scope onto the stack and initialize custom variables within this scope

using the various events, you can use the information stored in your custom scope to perform checks, defer checks,…

determine a pointcut where you exit the scope, call scopeExit and possibly perform additional checks
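The schema can be sketched with the scope helpers named above. The fragment assumes a hypothetical builder method named foo and stores the deferred checks in a custom secondPassChecks entry of the scope:

```groovy
// entering foo(...): push a scope holding a list of deferred checks
beforeMethodCall { call ->
    if (isMethodCallExpression(call) && call.methodAsString == 'foo') {
        newScope {
            secondPassChecks = []
        }
    }
}

// somewhere inside the scope: too early to decide, so record a check for later
unresolvedVariable { var ->
    def scope = currentScope
    if (scope?.secondPassChecks != null) {
        scope.secondPassChecks << { /* check var once more information is known */ }
    }
}

// leaving foo(...): run the deferred checks and pop the scope
afterMethodCall { call ->
    if (isMethodCallExpression(call) && call.methodAsString == 'foo') {
        scopeExit {
            secondPassChecks*.run()
        }
    }
}
```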

For the complete list of helper methods, please refer to the org.codehaus.groovy.transform.stc.GroovyTypeCheckingExtensionSupport and org.codehaus.groovy.transform.stc.TypeCheckingExtension classes. However, pay special attention to these methods:

isDynamic : takes a VariableExpression as argument and returns true if the variable is a DynamicExpression, which means, in a script, that it wasn’t defined using a type or def.

isGenerated : takes a MethodNode as an argument and tells if the method is one that was generated by the type checker extension using the newMethod method

isAnnotatedBy : takes an AST node and a Class (or ClassNode), and tells if the node is annotated with this class. For example: isAnnotatedBy(node, NotNull)

getTargetMethod : takes a method call as argument and returns the MethodNode that the type checker has determined for it

delegatesTo : emulates the behaviour of the @DelegatesTo annotation. It allows you to tell that the argument will delegate to a specific type (you can also specify the delegation strategy)

7.2. Advanced type checking extensions

All the examples above use type checking scripts. They are found in source form on the classpath, meaning that:

a Groovy source file, corresponding to the type checking extension, is available on compilation classpath

this file is compiled by the Groovy compiler for each source unit being compiled (often, a source unit corresponds to a single file)

It is a very convenient way to develop type checking extensions, however it implies a slower compilation phase, because of the compilation of the extension itself for each file being compiled. For this reason, it can be practical to rely on a precompiled extension. You have two options to do this:

write the extension in Groovy, compile it, then use a reference to the extension class instead of the source

write the extension in Java, compile it, then use a reference to the extension class

Writing a type checking extension in Groovy is the easiest path. Basically, the idea is that the type checking extension script becomes the body of the main method of a type checking extension class, as illustrated here:
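A sketch of such a class, assuming the hypothetical names RobotExtension and Robot; the body of run() is what the extension script would contain. Compiling and instantiating it only shows that it is a valid extension class; it takes effect once referenced by its fully qualified name, as in the commented annotation:

```groovy
import org.codehaus.groovy.transform.stc.GroovyTypeCheckingExtensionSupport

class Robot {
    Robot move(int qt) { this }
}

// referenced, once compiled, with @TypeChecked(extensions = 'RobotExtension')
// (in a real project the class would live in a package and a separate build step)
class RobotExtension extends GroovyTypeCheckingExtensionSupport.TypeCheckingDSL {
    @Override
    Object run() {
        unresolvedVariable { var ->
            if ('robot' == var.name) {
                storeType(var, classNodeFor(Robot))
                handled = true
            }
        }
    }
}

assert new RobotExtension() instanceof Script
```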

Setting up the extension is very similar to using a source form extension:

The difference is that instead of using a path on the classpath, you just specify the fully qualified class name of the precompiled extension.

In case you really want to write an extension in Java, then you will not benefit from the type checking extension DSL. The extension above can be rewritten in Java this way:

It is totally possible to use the @Grab annotation in a type checking extension. This means you can include libraries that would only be available at compile time. In that case, be aware that this can significantly increase compilation time (at least the first time the dependencies are grabbed).

A type checking extension is just a script that needs to be on the classpath. As such, you can share it as is, or bundle it in a jar file that would be added to the classpath.

While you can configure the compiler to transparently add type checking extensions to your script, there is currently no way to apply an extension transparently just by having it on the classpath.

Type checking extensions are used with @TypeChecked but can also be used with @CompileStatic. However, you must be aware that:

a type checking extension used with @CompileStatic will in general not be sufficient to let the compiler know how to generate statically compilable code from "unsafe" code

it is possible to use a type checking extension with @CompileStatic just to enhance type checking, that is to say introduce more compilation errors, without actually dealing with dynamic code

Let’s explain the first point, which is that even if you use an extension, the compiler will not know how to compile your code statically: technically, even if you tell the type checker the type of a dynamic variable, for example, it would not know how to compile it. Is it getBinding('foo'), getProperty('foo'), delegate.getFoo(),…? There’s absolutely no direct way to tell the static compiler how to compile such code even if you use a type checking extension (that would, again, only give hints about the type).

One possible solution for this particular example is to instruct the compiler to use mixed mode compilation. A more advanced one is to use AST transformations during type checking, but it is far more complex.

Type checking extensions allow you to help the type checker where it fails, but they also allow you to make it fail where it otherwise wouldn’t. In that context, it makes sense to support extensions for @CompileStatic too. Imagine an extension that is capable of type checking SQL queries. In that case, the extension would be valid in both dynamic and static contexts, because without the extension, the code would still pass.

In the previous section, we highlighted the fact that you can activate type checking extensions with @CompileStatic. In that context, the type checker would not complain anymore about some unresolved variables or unknown method calls, but it still wouldn’t know how to compile them statically.

Mixed mode compilation offers a third way, which is to instruct the compiler that whenever an unresolved variable or method call is found, then it should fall back to a dynamic mode. This is possible thanks to type checking extensions and a special makeDynamic call.

To illustrate this, let’s come back to the Robot example:

And let’s try to activate our type checking extension using @CompileStatic instead of @TypeChecked:
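The following is a minimal sketch of this setup. It assumes a hypothetical Robot class with a move(int) method and an extension script named robotextension.groovy. The extension is written to a temporary directory only so that the sketch is self-contained; in a real project it would normally just live on the compile classpath. The extension tells the type checker that the unresolved robot variable is a Robot.

```groovy
import groovy.transform.CompileStatic
import java.nio.file.Files
import org.codehaus.groovy.control.CompilerConfiguration
import org.codehaus.groovy.control.customizers.ASTTransformationCustomizer

// Hypothetical Robot class, used only for illustration
class Robot {
    int position = 0
    Robot move(int qt) { position += qt; this }
}

// Write the extension script to a temporary directory so the example is self-contained
File extDir = Files.createTempDirectory('stc-ext').toFile()
new File(extDir, 'robotextension.groovy').text = '''
unresolvedVariable { var ->
    if (var.name == 'robot') {
        storeType(var, classNodeFor(Robot))  // the unresolved variable is a Robot
        handled = true                        // also triggers dynamic resolution of the variable
    }
}
'''

// Put that directory on the class loader used at compile time
def loader = new GroovyClassLoader(getClass().classLoader)
loader.addClasspath(extDir.absolutePath)

// Activate the extension through @CompileStatic instead of @TypeChecked
def config = new CompilerConfiguration()
config.addCompilationCustomizers(
    new ASTTransformationCustomizer(CompileStatic, extensions: ['robotextension.groovy']))

def shell = new GroovyShell(loader, new Binding(), config)
def robot = new Robot()
shell.setVariable('robot', robot)
// robot is fetched dynamically from the binding, then move is called statically
def result = shell.evaluate('robot.move 100')

extDir.deleteDir()
```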

The script will run fine because the static compiler is told about the type of the robot variable, so it is capable of making a direct call to move. But before that, how did the compiler know how to get the robot variable? In fact, by default, in a type checking extension, setting handled=true on an unresolved variable will automatically trigger a dynamic resolution, so in this case you don’t have to do anything special to make the compiler use a mixed mode. However, let’s slightly update our example, starting from the robot script:

Here you can notice that there is no reference to robot anymore. Our extension will not help then, because we will not be able to tell the compiler that move is called on a Robot instance. This code can be executed in a fully dynamic way thanks to groovy.util.DelegatingScript:
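A minimal, fully dynamic sketch of this follows. It assumes a hypothetical Robot class whose move(int) method records the distance travelled. No type checking extension is involved yet: the script is compiled dynamically, and the call to move is routed to the delegate at runtime.

```groovy
import groovy.util.DelegatingScript
import org.codehaus.groovy.control.CompilerConfiguration

// Hypothetical Robot class, used only for illustration
class Robot {
    int position = 0
    Robot move(int qt) { position += qt; this }
}

// Scripts compiled with this configuration extend DelegatingScript
def config = new CompilerConfiguration()
config.scriptBaseClass = DelegatingScript.name
def shell = new GroovyShell(config)

// "move 100" has no explicit receiver: at runtime it is sent to the delegate
def runner = (DelegatingScript) shell.parse('move 100')
def robot = new Robot()
runner.setDelegate(robot)
def result = runner.run()
```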

If we want this to pass with @CompileStatic, we have to use a type checking extension, so let’s update our configuration:

In the previous section, we learned how to deal with unrecognized method calls, so we can write this extension:

If you try to execute this code, you may be surprised to find that it actually fails at runtime:

The reason is very simple: while the type checking extension is sufficient for @TypeChecked, which does not involve static compilation, it is not enough for @CompileStatic, which requires additional information. In this case, you told the compiler that the method existed, but you didn’t tell it which method it actually is, nor what the receiver of the message (the delegate) is.

Fixing this is easy: it just implies replacing the newMethod call with a call to makeDynamic.

The makeDynamic call does three things:

it returns a virtual method just like newMethod

automatically sets the handled flag to true for you

but also marks the call to be done dynamically

So when the compiler generates bytecode for the call to move, which is now marked as a dynamic call, it falls back to the dynamic compiler and lets it handle the call. And since the extension tells us that the return type of the dynamic call is a Robot, subsequent calls will be done statically!
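Putting the pieces together, here is a self-contained sketch of the makeDynamic variant. As before, the Robot class is hypothetical. The extension script is written to a temporary directory only so the example runs on its own. The script is a DelegatingScript compiled with @CompileStatic, and the unresolved implicit-this call to move(int) is turned into a dynamic call declared to return a Robot.

```groovy
import groovy.transform.CompileStatic
import groovy.util.DelegatingScript
import java.nio.file.Files
import org.codehaus.groovy.control.CompilerConfiguration
import org.codehaus.groovy.control.customizers.ASTTransformationCustomizer

// Hypothetical Robot class, used only for illustration
class Robot {
    int position = 0
    Robot move(int qt) { position += qt; this }
}

// Extension script: turn unresolved "move(int)" calls into dynamic calls returning a Robot
File extDir = Files.createTempDirectory('stc-ext').toFile()
new File(extDir, 'robotextension.groovy').text = '''
methodNotFound { receiver, name, argList, argTypes, call ->
    if (isMethodCallExpression(call)
            && call.implicitThis
            && name == 'move'
            && argTypes.length == 1
            && argTypes[0] == classNodeFor(int)) {
        // Unlike newMethod, makeDynamic also sets handled = true
        // and marks the call to be compiled dynamically
        makeDynamic(call, classNodeFor(Robot))
    }
}
'''
def loader = new GroovyClassLoader(getClass().classLoader)
loader.addClasspath(extDir.absolutePath)

// Statically compile a DelegatingScript, using the extension above
def config = new CompilerConfiguration()
config.scriptBaseClass = DelegatingScript.name
config.addCompilationCustomizers(
    new ASTTransformationCustomizer(CompileStatic, extensions: ['robotextension.groovy']))

def shell = new GroovyShell(loader, new Binding(), config)
def runner = (DelegatingScript) shell.parse('move 100')
def robot = new Robot()
runner.setDelegate(robot)   // the dynamic call to move is sent to this delegate
def result = runner.run()

extDir.deleteDir()
```

Replacing makeDynamic with newMethod('move', classNodeFor(Robot)) plus handled = true would still satisfy @TypeChecked. However, it would not tell the static compiler how to actually perform the call.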

Some may wonder why the static compiler doesn’t do this by default without an extension. It is a design decision:

if the code is statically compiled, we normally want type safety and best performance

so if unrecognized variables/method calls are made dynamic, you lose type safety, but also all detection of typos at compile time!

In short, if you want to have mixed mode compilation, it has to be explicit, through a type checking extension, so that the compiler, and the designer of the DSL, are totally aware of what they are doing.

makeDynamic can be used on three kinds of AST nodes:

a method call (MethodCall)

a variable (VariableExpression)

a property expression (PropertyExpression)

If that is not enough, then it means that static compilation cannot be done directly and that you have to rely on AST transformations.

Type checking extensions look very attractive from an AST transformation design point of view: extensions have access to context like inferred types, which is often nice to have. And an extension has direct access to the abstract syntax tree. Since you have access to the AST, there is nothing in theory that prevents you from modifying it. However, we do not recommend doing so, unless you are an advanced AST transformation designer and well aware of the compiler internals:

First of all, you would explicitly break the contract of type checking, which is to annotate, and only annotate, the AST. Type checking should not modify the AST, because you would no longer be able to guarantee that code annotated with @TypeChecked behaves the same as it would without the annotation.

If your extension is meant to work with @CompileStatic, then you can modify the AST, because this is indeed what @CompileStatic will eventually do. Static compilation doesn’t guarantee the same semantics as dynamic Groovy, so there is effectively a difference between code compiled with @CompileStatic and code compiled with @TypeChecked. It’s up to you to choose whatever strategy you want to update the AST, but using an AST transformation that runs before type checking is probably easier.

If you cannot rely on a transformation that kicks in before the type checker, then you must be very careful.

Examples of real-life type checking extensions are easy to find. You can download the source code for Groovy and take a look at the TypeCheckingExtensionsTest class, which is linked to various extension scripts.

An example of a complex type checking extension can be found in the Markup Template Engine source code: this template engine relies on a type checking extension and AST transformations to transform templates into fully statically compiled code. Sources for this can be found here.

Groovy - If/Else Statement

The next decision-making statement we will see is the if/else statement. The general form of this statement is −

The if/else statement works as follows. First, the condition in the if statement is evaluated. If the condition is true, the statements in the if block are executed, the else block is skipped, and execution continues with the statement after the if/else. If the condition is false, the statements in the else block are executed instead, and execution then continues after the if/else. The following diagram shows the flow of the if statement.

Following is an example of an if/else statement −

In the above example, we first initialize a variable to a value of 2. We then evaluate the value of the variable and decide which println statement should be executed. The output of the above code would be −
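Here is a minimal sketch of such an if/else statement. The variable is initialized to 2, as described. The comparison against 100 and the message texts are assumptions made for illustration. The branch logic is wrapped in a method so that both outcomes can be checked.

```groovy
// Returns the message for whichever branch of the if/else runs
String describe(int a) {
    if (a < 100) {
        // Condition is true: this block runs and the else block is skipped
        return 'The value is less than 100'
    } else {
        // Condition is false: only this block runs
        return 'The value is 100 or greater'
    }
}

int a = 2          // initialize the variable to 2
println describe(a)
```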


Groovy Conditional Statements: Controlling Program Flow with Precision

Introduction

Conditional statements are the bedrock of any programming language, and Groovy is no exception. Groovy, with its expressive syntax and dynamic nature, provides a robust set of conditional statements to help you make decisions and control the flow of your code. In this blog post, we’ll explore Groovy’s conditional statements, including the if, if-else, and switch statements, and demonstrate how to wield their power effectively.

The if Statement in Groovy

The if statement in Groovy, as in many other programming languages, allows you to execute a block of code if a specified condition is true. The syntax is straightforward:

Here’s a simple example:

In this example, the code inside the if block will execute because the condition age >= 18 is true.
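A small sketch of this case follows. The value 20 for age and the message text are assumptions; any age of 18 or more makes the condition true. Messages are collected in a list instead of only printed, so the result is easy to inspect.

```groovy
def messages = []
int age = 20   // assumed value; any age of 18 or more makes the condition true

if (age >= 18) {
    // Executes only when the condition is true
    messages << 'You are an adult.'
}
println messages
```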

The if-else Statement

The if-else statement in Groovy extends the if statement by allowing you to specify an alternative block of code to execute if the condition is false. Here’s the syntax:

Consider this example:

In this case, because the condition score >= 70 is false, the code inside the else block will execute.
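A matching sketch for the if-else case follows. The score of 65 and the "Pass"/"Fail" labels are assumed values chosen so that score >= 70 is false.

```groovy
int score = 65   // assumed value below the threshold of 70
String result

if (score >= 70) {
    result = 'Pass'
} else {
    // Executes because 65 >= 70 is false
    result = 'Fail'
}
println result
```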

The switch Statement

The switch statement in Groovy is used when you need to evaluate multiple conditions and execute different code blocks based on the value of a variable or expression. Here’s the syntax:

Here’s an example:

In this example, the code inside the case "Monday" block executes because the value of day matches the condition.
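A minimal switch sketch follows, assuming day holds "Monday". The other cases and messages are illustrative. The second case uses a list, which Groovy's switch accepts: the case matches if the value is any element of the list.

```groovy
String day = 'Monday'   // assumed value
String message

switch (day) {
    case 'Monday':
        message = 'Start of the work week'
        break
    case ['Saturday', 'Sunday']:   // matches if day is any element of the list
        message = 'Weekend'
        break
    default:
        message = 'Midweek'
}
println message
```

The break statements matter: as in Java, a classic Groovy switch falls through to the next case without them.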

Practical Use Cases

Conditional statements in Groovy are incredibly versatile and find applications in various scenarios, including:

- User Input Handling: Validate and respond to user input in interactive applications.

- Data Filtering: Filter data based on specific criteria in data processing tasks.

- Flow Control: Control the flow of a program to adapt to different situations.

- Error Handling: Handle exceptions gracefully with conditional error-handling routines.

- State Management: Manage the state of a program or application based on specific conditions.

Conditional statements in Groovy are indispensable tools for making decisions and responding dynamically to changing conditions in your code. By mastering the use of if, if-else, and switch statements, you can write more responsive and adaptable code, making your Groovy applications more powerful and user-friendly. Whether you’re building web applications, automation scripts, or complex software, Groovy’s conditional statements are your allies in creating intelligent and dynamic programs.
